The Role of the Developer in the New Network

The infrastructure developer/architect will be a key player in the new network

Cloud and virtualization are imposing a heavy burden on the infrastructure supporting applications. The "new network" necessary to support the dynamic environment in which applications are rapidly provisioned requires that network components -- from switches to firewalls to load balancers -- be not only dynamic, but able to collaborate with the broader management ecosystem. We all know what "collaborate" means for applications, and it means the same thing for network components: integration.

Network components will need to integrate with applications to improve scaling decisions, with each other to react to changes in applications (launch, decommission, scaling), and with the orchestration systems that will automate many of the tasks typically performed manually by network and systems administrators.

The means to achieve such integration exists for most components, and it is what a developer would expect: programmatic. APIs exist to allow remote management and control of network devices (virtual and physical), and someone will almost certainly need to use those APIs to achieve a dynamic infrastructure.

A potentially new role within IT is emerging, one that focuses on network and infrastructure components rather than applications: infrastructure developer.

The Need for the New Network

The need for an infrastructure developer stems directly from the need for a new network. Virtualization and cloud computing ultimately require new kinds of interoperability to reduce the burdens these technologies impose. Every time an application is launched, scaled, migrated, or decommissioned, the network must be reconfigured to match. What's really problematic is that this can happen many times over a short period and, in a large-scale environment, for myriad applications at the same time. Massive amounts of information about policies, locations, and infrastructure must be shared over the same network, at the same time. Components must be updated, configurations changed, policies applied, and it all has to happen in the right order. The scalability of such a dynamic environment is inherently limited by a single bottleneck: manual configuration and management.
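The "right order" constraint is essentially a dependency graph. The following is a minimal sketch under stated assumptions. The provisioning step names and their dependencies are hypothetical and illustrative, not any product's API. Python's standard-library `graphlib.TopologicalSorter` computes an order in which every step runs only after its prerequisites.

```python
from graphlib import TopologicalSorter

# Hypothetical provisioning steps for one new application instance.
# Each key maps to the set of steps that must complete before it can run.
DEPENDENCIES: dict[str, set[str]] = {
    "create_vlan": set(),
    "configure_routing": {"create_vlan"},
    "assign_ports": {"create_vlan"},
    "apply_firewall_policy": {"configure_routing"},
    "register_with_load_balancer": {"assign_ports", "apply_firewall_policy"},
}


def provisioning_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an order in which every step follows all of its prerequisites.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    for step in provisioning_order(DEPENDENCIES):
        print(step)
```

A real orchestration framework would also execute each step against a device API and handle failures. This sketch only shows that the ordering can be derived from declared dependencies rather than hard-coded.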

The new network needs to enable the means by which a dynamic infrastructure supporting multi-component/tier applications can be automatically discovered, rapidly provisioned, scaled, secured, managed, modified, and migrated across disparate locations. Without automation, these operational processes take far too long to be called "rapid" and are inherently error-prone, as people miss steps or mistype commands.

It's not just about starting up and shutting down applications. A dynamic infrastructure must be able to react on demand to events and changes in the environment. As policies governing the delivery of applications change, as the applications themselves change, and when attacks threaten availability and security, the underlying infrastructure must be able to adapt and respond with as little manual intervention as possible.

Why Is This So Complex?

Consider the series of events that occurs when an application is first deployed anywhere. For a developer or architect, once the application is deployed into the production environment and verified to be working, it's time to shift the burden to the network team. Networking folks often call this "throwing the application over the wall," because the line of demarcation between networking and application development is long and wide and, like a castle wall, not meant to be breached.

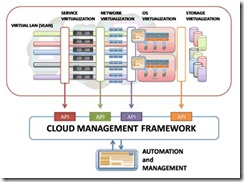

Figure 1: Abstracted diagram of integration of cloud framework with infrastructure component APIs.

The network team has to concern itself with firewall policies, routing, switching, load balancing, network-hosted security such as IDS, IPS, and web application firewalls, as well as any application-specific solutions such as acceleration, optimization, or resource obfuscation. Each policy is a potential point at which an error might be introduced or a step missed, leaving applications and corporate resources vulnerable to exploitation. When a second or third instance of the application is launched to provide capacity on demand, the load balancer must be updated, and any application-specific policies might also require modification. Again, this is generally a manual process. Most of these processes are transparent to the developer today. In a dynamic, cloud computing-style architecture, however, these processes become automated and are driven by a framework, and that framework requires integration with, and control over, the constituent infrastructure components.
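The load balancer update described above can be made concrete. Here is a minimal sketch of how a framework might react to application lifecycle events. `LoadBalancerPool`, `handle_event`, and the event format are hypothetical stand-ins. A real implementation would call the load balancer's management API instead of changing an in-memory set.

```python
from dataclasses import dataclass, field


@dataclass
class LoadBalancerPool:
    """Hypothetical in-memory stand-in for a load balancer pool."""
    name: str
    members: set[str] = field(default_factory=set)

    def add_member(self, address: str) -> None:
        self.members.add(address)

    def remove_member(self, address: str) -> None:
        # discard() keeps removal idempotent if the member is already gone
        self.members.discard(address)


def handle_event(pool: LoadBalancerPool, event: dict[str, str]) -> None:
    """Update pool membership in response to a lifecycle event.

    The event format {"type": ..., "address": ...} is an assumption.
    """
    kind = event["type"]
    if kind == "launch":
        pool.add_member(event["address"])
    elif kind == "decommission":
        pool.remove_member(event["address"])
    else:
        raise ValueError(f"unsupported event type: {kind}")


if __name__ == "__main__":
    pool = LoadBalancerPool("app-pool")
    handle_event(pool, {"type": "launch", "address": "10.0.0.5:80"})
    handle_event(pool, {"type": "launch", "address": "10.0.0.6:80"})
    handle_event(pool, {"type": "decommission", "address": "10.0.0.5:80"})
    print(sorted(pool.members))  # ['10.0.0.6:80']
```

Unknown event types raise an error rather than being silently ignored. A missed update is exactly the kind of skipped step that leaves the pool out of sync with the running instances.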

It will become the responsibility of infrastructure developers to enable this automation through integration of networking components into the automation frameworks necessary to scale such dynamic architectures effectively.

Integration Required

The defining, programmatic attributes of Web 2.0 are interactivity and collaboration. The former is enabled by AJAX, the latter by the ubiquitous nature of HTTP and the emergence of RESTful APIs. Like Web 2.0, Infrastructure 2.0 is about collaboration and interactivity, both enabled via the existence of APIs -- often referred to in the infrastructure realm as a management or control plane.

These APIs are standards-based, primarily SOAP, though a few RESTful APIs are emerging as well. They provide the means by which infrastructure components like load balancers and switches can be controlled dynamically. These very granular APIs allow external entities, like a cloud or virtualization management framework, to configure, manage, and monitor infrastructure components. They also provide the means by which the components can be integrated into the automation and orchestration frameworks necessary to enable auto-scaling.

These APIs might be used to add application instances to, or remove them from, a load balancer in a dynamic environment in order to automate scaling of the application. They also allow external systems to retrieve statistics on the capacity and usage of the applications they support, enabling better decisions about delivery and scalability in the environment.
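The second use, turning retrieved statistics into a scaling decision, might look like the minimal sketch below. `PoolStats`, `scaling_decision`, and the connections-per-member thresholds are assumptions for illustration only. The actual metrics and how they are retrieved depend on the specific load balancer's API.

```python
from dataclasses import dataclass


@dataclass
class PoolStats:
    """Hypothetical statistics as retrieved from a load balancer's API."""
    active_connections: int
    member_count: int


def scaling_decision(stats: PoolStats, max_per_member: int = 500,
                     min_per_member: int = 100, min_members: int = 1) -> str:
    """Return "scale_out", "scale_in", or "hold".

    Thresholds are illustrative defaults, not vendor recommendations.
    """
    if stats.member_count < 1:
        return "scale_out"  # nothing is currently serving traffic
    per_member = stats.active_connections / stats.member_count
    if per_member > max_per_member:
        return "scale_out"
    if per_member < min_per_member and stats.member_count > max(min_members, 1):
        # Only scale in if the remaining members would stay under the upper limit.
        if stats.active_connections / (stats.member_count - 1) <= max_per_member:
            return "scale_in"
    return "hold"


if __name__ == "__main__":
    print(scaling_decision(PoolStats(active_connections=1200, member_count=2)))  # scale_out
    print(scaling_decision(PoolStats(active_connections=150, member_count=3)))   # scale_in
```

Before scaling in, the function checks whether the remaining members would stay under the upper threshold. Without that check, removing a member could immediately trigger a scale-out and cause the pool to oscillate.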

These are not plug-n-play APIs, nor are they service-oriented in the SOA sense of the term. In other words, they are more akin to SOAP wrappers around a complex and granular SDK than to the business- or task-related functions generally associated with a robust service-oriented architecture. It may take many API calls to perform a single task because of both the nature of network components and the way in which those components expose their control planes.

For example, Virtual Local Area Networks (VLANs) are commonly used to isolate and segment customer traffic within an off-premise cloud computing environment. Creating a VLAN on a network device takes several steps:

- The VLAN must be created

- Any required routing must be configured

- Ports must be associated with the VLAN

Most network components require multiple configuration "steps" to complete a single task, and these steps must be mirrored when automated through their respective APIs. Cloud management frameworks today, the ones with which developers may interact when deploying an application in an off-premise cloud, are already highly abstracted: all the steps necessary to perform a specific task such as "create a new VLAN" are encapsulated in a single cloud management framework API call.
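The VLAN steps, and their encapsulation behind a single call, can be sketched as follows. `FakeSwitch` is a hypothetical in-memory stand-in for a device's granular control-plane API. A gateway assignment stands in for "any required routing". `provision_vlan` plays the role of the single framework-level call. Each granular call can fail independently, so the sketch records an undo action after every completed step and replays them in reverse if a later step fails.

```python
from collections.abc import Callable


class FakeSwitch:
    """Hypothetical in-memory stand-in for a switch's granular control-plane API."""

    def __init__(self) -> None:
        self.vlans: dict[int, str] = {}
        self.gateways: dict[int, str] = {}
        self.port_vlan: dict[str, int] = {}

    def create_vlan(self, vlan_id: int, name: str) -> None:
        if vlan_id in self.vlans:
            raise ValueError(f"VLAN {vlan_id} already exists")
        self.vlans[vlan_id] = name

    def delete_vlan(self, vlan_id: int) -> None:
        self.vlans.pop(vlan_id, None)

    def set_vlan_gateway(self, vlan_id: int, gateway: str) -> None:
        self.gateways[vlan_id] = gateway

    def clear_vlan_gateway(self, vlan_id: int) -> None:
        self.gateways.pop(vlan_id, None)

    def assign_port(self, port: str, vlan_id: int) -> None:
        if port in self.port_vlan:
            raise ValueError(f"port {port} is already assigned")
        self.port_vlan[port] = vlan_id

    def unassign_port(self, port: str) -> None:
        self.port_vlan.pop(port, None)


def provision_vlan(switch: FakeSwitch, vlan_id: int, name: str,
                   gateway: str, ports: list[str]) -> None:
    """One framework-level call that performs the three granular steps in order.

    After each step succeeds, an undo action is recorded. If a later step
    raises, the recorded actions run in reverse so the device is not left
    half-configured, and the original exception is re-raised.
    """
    undo: list[Callable[[], None]] = []
    try:
        # Step 1: create the VLAN
        switch.create_vlan(vlan_id, name)
        undo.append(lambda: switch.delete_vlan(vlan_id))
        # Step 2: configure routing (modeled here as a gateway assignment)
        switch.set_vlan_gateway(vlan_id, gateway)
        undo.append(lambda: switch.clear_vlan_gateway(vlan_id))
        # Step 3: associate ports with the VLAN
        for port in ports:
            switch.assign_port(port, vlan_id)
            undo.append(lambda p=port: switch.unassign_port(p))
    except Exception:
        for action in reversed(undo):
            action()
        raise
```

For example, suppose VLAN 200 is provisioned on ports `eth3` and `eth2`, but `eth2` already belongs to another VLAN. The call raises `ValueError`, and VLAN 200, its gateway, and the `eth3` assignment are all rolled back. The existing assignment of `eth2` is left untouched.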

Consider as an example Amazon's Elastic Load Balancing (ELB) and GoGrid's load balancing services. Both enable customer control of the underlying load balancing infrastructure through abstracted and simplified cloud framework APIs, making integration for the customer much more lightweight than the actual integration occurring under the covers of the cloud. The integration hidden from the customer is much more complex, taking advantage of the verbose APIs offered by load balancing solutions today.

It is this latter integration -- the more difficult of the two -- that becomes the responsibility of the organization when an on-premise cloud computing initiative begins and it is this integration that will require the expertise of developers well-versed in integration and standards-based APIs as well as core networking concepts.

Infrastructure Integration

This new role -- infrastructure developer -- is going to require skills with which most developers are likely (unhappily) familiar: integration. It will also require skills with which most developers are likely unfamiliar: infrastructure APIs and behavior.

This will require developers to become familiar with the integration capabilities, i.e., the APIs, offered by network and application delivery network solutions as part of the acquisition process. Infrastructure architects will need to understand the impact of introducing new solutions on the automation architecture and become an integral part of the decision-making process for such solutions. Infrastructure architects and developers will need to expand their knowledge base of networking beyond IP and HTTP and become more familiar with some of the key networking concepts related to on-demand architectures to integrate relevant components into an enterprise automation framework.

It is through an enterprise automation framework that agility will be achieved and efficiencies gained from emerging technology such as cloud computing and virtualization. The infrastructure developer/architect will be a key player in the successful implementation and deployment of such frameworks. The role of the developer in the "new network" is key to its implementation and provides a green field of development opportunities for those brave enough to explore the complex, new realm of infrastructure integration.

Source: https://www.drdobbs.com/architecture-and-design/225800076