Now the Lab Management part of the dogfooding story…

In my last post I talked about how the Visual Studio Test and Lab Management teams are dogfooding those products: as we enter the home stretch of the VS 2010 release, we are using the very same tools we are building to test and sign off on the release. We are using the RC bits, which will be broadly released very shortly. My team has already blogged about all of the changes that have gone into the RC version of the testing tools; you can read about those here.

In this post, I will focus on how we are dogfooding the Lab Management product and how we are benefitting from it. This really highlights all of the coolness you can expect to see when you use the product too. Please refer to the last post for the infrastructure and topology that make up the “lab” my team has set up using the Lab Management product.

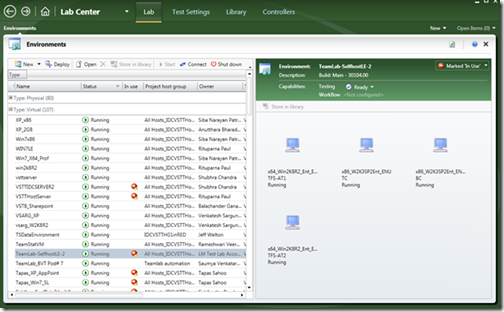

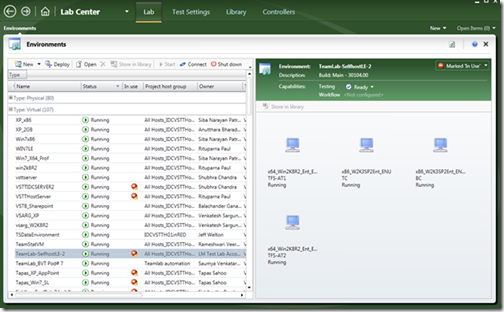

The first diagram that I include below shows you a view of the lab in the “Lab” activity center of the Microsoft Test Manager 2010 tool.

You can see that, in this instance, the team is using 107 virtual environments for their testing (in addition to another 80 physical environments). Each virtual environment is a logical collection of virtual machines that together make up the environment or configuration. For example, the highlighted environment is made up of four virtual machines (shown on the right side of the tool).

While this post is not about individual features in the product, let me take a moment to talk about virtual lab environments and what you do with them …

Setting up these virtual environments is really easy; we have blogged extensively about this at the team blog site. You can create a virtual environment by selecting a set of virtual machine (VM) templates from the library. In the RC release we have added one more neat capability, which lets you compose a virtual environment from VMs that you may already have running on the host. Many of you, I am sure, are already using virtualization in your teams and likely have VMs already set up. You simply need to install a few agents on those VMs and, with the RC release, you can use the new compose feature to create a virtual lab environment from them. When you create the environment, you can also enable the capabilities to automate tests and to deploy applications (for example, the applications under test) onto it.

Once you have created the environments, you can apply the full repertoire of testing capabilities in this release to them. That, in fact, is how we have been doing all of the testing that I talked about in the last post.

Getting back to the diagram shown above, I want to highlight one specific benefit that I myself have experienced because of this dogfooding. While we have been building this product over the last couple of years, it was always a bit difficult to get a full view of all of the configurations and environments that my QA teams were managing in the physical labs. Now, with our product, I get a complete view of all of this from my own desktop, using the Microsoft Test Manager 2010 tool! This is just awesome for me as a manager. In the view above, I can see the different types of environments that the team is using and what makes up those environments. I can also look at this through multiple views.

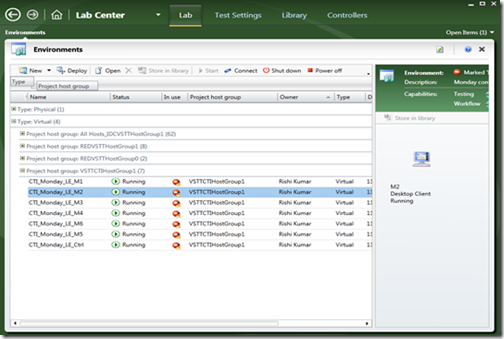

The view below allows me to see which host groups the environments are part of …

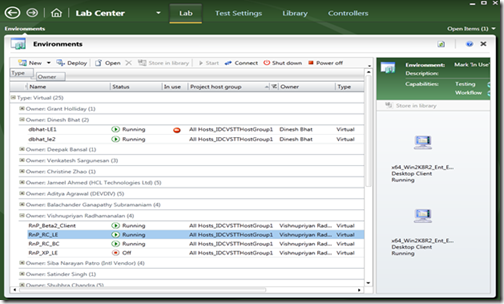

… or I can view which tester owns which environments.

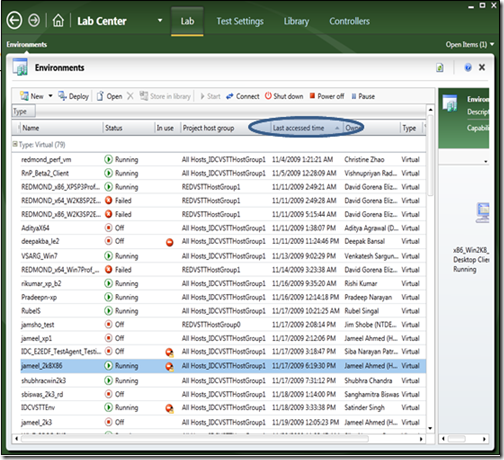

In another view, shown below, I can see when the environments were last accessed (I am using a screenshot from the middle of November). This gives me a great sense of the resource utilization in the lab.

The seamless integration of the Lab Center with the Test Center in the Test Manager tool provides a very powerful interface for keeping track of all aspects of what’s going on in your test labs, and I have personally enjoyed using it.

The build-deploy-test capability of the product has also been a big hit with the team. It essentially lets you define a workflow that does the following:

- Schedule a build for the application under test

- Once the build is done, select a lab environment on which you want to test the latest build

- Restore the environment to a known good checkpoint (guaranteeing a clean state to begin with)

- Deploy the bits of the application under test onto the (multi-tier) environment

- Take a post-deployment snapshot, so that testers can return to a clean starting state for testing

- Automatically run a suite of tests on the above environment, and report results
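The post describes this workflow only at the level of the steps above. To make the ordering and its dependencies concrete, here is a minimal Python sketch that models each step as a function run in sequence, stopping at the first failure. It is not the Team Build or Lab Management API: the step functions, the shared context dictionary, and names such as `run_workflow`, `build-42`, and `TestEnv-1` are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical model: a step receives a shared context and returns (succeeded, message).
Step = Callable[[Dict], Tuple[bool, str]]


@dataclass
class StepResult:
    name: str
    ok: bool
    message: str


def run_workflow(steps: List[Tuple[str, Step]], ctx: Dict) -> List[StepResult]:
    """Run the steps in order and stop at the first one that fails."""
    results: List[StepResult] = []
    for name, step in steps:
        ok, message = step(ctx)
        results.append(StepResult(name, ok, message))
        if not ok:
            break  # later steps depend on earlier ones, so don't continue
    return results


# Hypothetical stand-ins for the real build, lab, and test operations.
def build(ctx):
    ctx["build"] = "build-42"
    return True, "built " + ctx["build"]


def select_environment(ctx):
    ctx["env"] = {"name": "TestEnv-1", "state": "dirty", "snapshots": []}
    return True, "selected " + ctx["env"]["name"]


def restore_checkpoint(ctx):
    ctx["env"]["state"] = "clean"  # back to a known good checkpoint
    return True, "restored known good checkpoint"


def deploy(ctx):
    if ctx["env"]["state"] != "clean":
        return False, "environment is not in a clean state"
    ctx["env"]["deployed"] = ctx["build"]
    return True, "deployed " + ctx["build"]


def take_snapshot(ctx):
    # Post-deployment snapshot testers can return to.
    ctx["env"]["snapshots"].append("post-deploy:" + ctx["build"])
    return True, "snapshot taken"


def run_tests(ctx):
    return True, "tests run against " + ctx["env"]["deployed"]


STEPS = [
    ("build", build),
    ("select environment", select_environment),
    ("restore checkpoint", restore_checkpoint),
    ("deploy", deploy),
    ("snapshot", take_snapshot),
    ("run tests", run_tests),
]

if __name__ == "__main__":
    for result in run_workflow(STEPS, {}):
        print(f"{result.name:20} {'ok' if result.ok else 'FAILED':6} {result.message}")
```

Stopping at the first failure mirrors the fact that each step depends on the one before it: in this sketch, deploying onto an environment that was never restored to a clean checkpoint is treated as an error rather than silently allowed.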

A new build of the application is really at the heart of the entire development process. A capability like this takes away the inefficiencies and hand-off errors around the build, and I expect it to be extremely popular with development teams: with testers, and also with developers, who will use this mechanism to do their own kinds of testing on production-like environments.

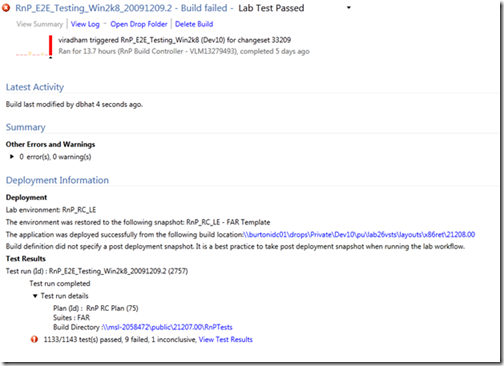

The Lab Management product extends the Team Build functionality in TFS (accessed from Team Explorer) to provide this capability. Below is an example of a feature team using it. Notice the “deployment summary” information page presented at the end of the workflow; it summarizes the various steps of the workflow and their results.

At this point, I must also highlight that the ability to customize the build-deploy-test workflow is an extremely powerful capability. You can use it to automate many kinds of testing needs. My team has blogged about this here. The power of this was particularly evident in our own dogfooding experience. Remember, when we are dogfooding, the “application under test” is really VS 2010, including the same testing tools that we are using. So every new build is particularly challenging for us: as part of the deployment workflow, the same agents that are orchestrating the workflow need to be upgraded to the newer version that is part of the new build! This is no easy feat, because it requires reboots in the middle, and a newer version of a component has to complete a workflow that was initiated by the older version. And all of this happens automatically when a new build comes out! The test team has done a good job writing the scripts and cmdlets to customize the workflow, and I was very happy to observe the flexibility and power of this feature in our product.
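The post does not describe how the team's scripts carry the workflow across reboots. One generic technique for this kind of problem is to persist the workflow's progress after each step, so that whatever process runs after the restart (possibly a newer version of the agent) can resume from the saved checkpoint instead of starting over. The Python sketch below illustrates only that idea; `run_resumable`, `RebootRequired`, and the JSON state file are hypothetical and are not part of the product.

```python
import json
import os
import tempfile
from typing import Callable, List, Tuple


class RebootRequired(Exception):
    """Raised by a step that finished its work but needs the machine to restart."""


def _save(path: str, next_index: int) -> None:
    with open(path, "w") as f:
        json.dump({"next": next_index}, f)


def run_resumable(steps: List[Tuple[str, Callable[[], None]]], state_path: str) -> str:
    """Run steps in order, checkpointing progress so a later run resumes where this one stopped."""
    next_index = 0
    if os.path.exists(state_path):
        with open(state_path) as f:
            next_index = json.load(f)["next"]

    for i in range(next_index, len(steps)):
        name, step = steps[i]
        try:
            step()
        except RebootRequired:
            # The step did its work; resume at the following step after the restart.
            _save(state_path, i + 1)
            return "reboot pending after " + name
        _save(state_path, i + 1)

    if os.path.exists(state_path):
        os.remove(state_path)  # workflow finished; clear the checkpoint
    return "completed"


if __name__ == "__main__":
    def upgrade_agents():
        print("installing newer agents from the new build")
        raise RebootRequired()

    steps = [
        ("deploy build", lambda: print("deploying")),
        ("upgrade agents", upgrade_agents),
        ("run tests", lambda: print("running tests")),
    ]
    state = os.path.join(tempfile.mkdtemp(), "workflow_state.json")
    print(run_resumable(steps, state))  # first run stops at the reboot
    print(run_resumable(steps, state))  # run after the simulated restart resumes
```

Because the saved state in this sketch is just a step index, the resuming process only has to agree with the original one on the order of the steps, not on any in-memory data; that is what would let a newer version of the runner pick up a workflow started by an older one. A step that fails with any other exception leaves the checkpoint unchanged, so it is retried on the next run.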

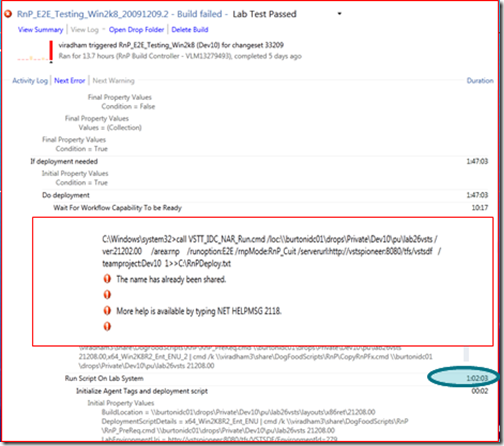

The diagram below also shows how much easier it is to find where the problem is when a workflow fails, particularly when the flow spans multiple machines, as it did in the case above. The logs generated as part of the workflow are extremely detailed and nicely organized (highlighting the different steps, the time they took, and the warnings/errors generated), all in one place. This is a big improvement over the situation where testers have to look manually at the logs on different machines, one at a time, to see what might have gone wrong. Here all of the information is in one log that you can access from your desktop, as part of the workflow summary information.

Features such as recording the elapsed time for each step of the deployment workflow also help a great deal in making the testing process efficient. For example, as shown above, they help testers identify which part of the workflow is taking the most time and decide whether it needs to be optimized.
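To illustrate the kind of consolidation described above, here is a minimal Python sketch that merges log entries from several machines into one per-step summary (elapsed time, warning and error counts, machines involved) and picks out the slowest step. The `LogEntry` fields and the sample machine names are hypothetical; the product's actual log format is not shown in this post.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class LogEntry:
    machine: str
    step: str
    start: float  # seconds since the workflow started
    end: float
    level: str = "info"  # "info", "warning", or "error"


def summarize(entries: List[LogEntry]) -> Dict[str, dict]:
    """Merge per-machine entries into one summary per step, in first-seen step order."""
    summary: Dict[str, dict] = {}
    for e in entries:
        s = summary.setdefault(e.step, {
            "start": e.start, "end": e.end,
            "warnings": 0, "errors": 0, "machines": set(),
        })
        s["start"] = min(s["start"], e.start)
        s["end"] = max(s["end"], e.end)
        s["machines"].add(e.machine)
        if e.level == "warning":
            s["warnings"] += 1
        elif e.level == "error":
            s["errors"] += 1
    for s in summary.values():
        # Wall-clock time for the step, so parallel work on several tiers is not double-counted.
        s["elapsed"] = s["end"] - s["start"]
    return summary


def slowest_step(summary: Dict[str, dict]) -> str:
    return max(summary, key=lambda step: summary[step]["elapsed"])


if __name__ == "__main__":
    log = [
        LogEntry("build-agent", "build", 0, 300),
        LogEntry("web-tier", "deploy", 310, 400, "warning"),
        LogEntry("db-tier", "deploy", 305, 450),
        LogEntry("test-controller", "run tests", 460, 900, "error"),
    ]
    report = summarize(log)
    for step, s in report.items():
        print(f"{step:10} {s['elapsed']:6.0f}s  warnings={s['warnings']} errors={s['errors']}")
    print("slowest step:", slowest_step(report))
```

Measuring each step as the latest end minus the earliest start across machines is a design choice of this sketch: summing per-machine durations instead would overstate steps, such as a multi-tier deployment, that run on several machines at once.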

Before I sign off on this story, I want to return for a moment to the first diagram in this post. I am including it here again.

Notice the highlighted environment, “TeamLab-SelfhostLE-2”, and the set of machines on the right-hand side, which shows what the environment is made of. You can see that this environment includes a load-balanced TFS server, a build controller, and a test controller. This, in fact, is one of the environments that the Lab Management feature teams are using to test the Lab Management product. It is essentially a “recursive” use of the product within itself. Pretty neat, isn’t it?

I hope this post and the last one have given you a good sense of the power of the testing tools that we are building, and of the extent to which we have been dogfooding them to ensure that they are really ready for our customers. This dogfooding has been going on for more than three months now, and the product is looking amazingly solid.

The RC bits of VS 2010 will be released shortly. Setting up a lab certainly needs a bigger commitment of time and resources than trying out client-side-only features, but you can get started with a lab of just a couple of Hyper-V-capable machines and see for yourself the power of the product and how it removes many of the inefficiencies that plague a typical dev/test lab. I hope this story gives you the impetus to get going on the product. I would love to see a diverse set of customers go live with the product and share their success stories with us before we release the product.

Cheers!