Why Containers?

The world has concluded that Docker containers provide valuable capabilities. Some argue that containers are just another form of virtualization, while others suggest that they streamline application delivery. This short presentation attempts to explain the core value proposition of containers.

Slide 1: Why containers?

This is the agenda for this blog post. The interesting takeaway is that containerization predates cloud computing. You can trace its roots all the way back to 1979, as you will soon find out in this presentation. One of the key goals here is understanding the relationship between containers and DevOps. We will begin by defining what a container is, followed by a description of DevOps. From that point we can begin to understand how containerization has contributed greatly to the DevOps community.

Slide 2: Overview of this post

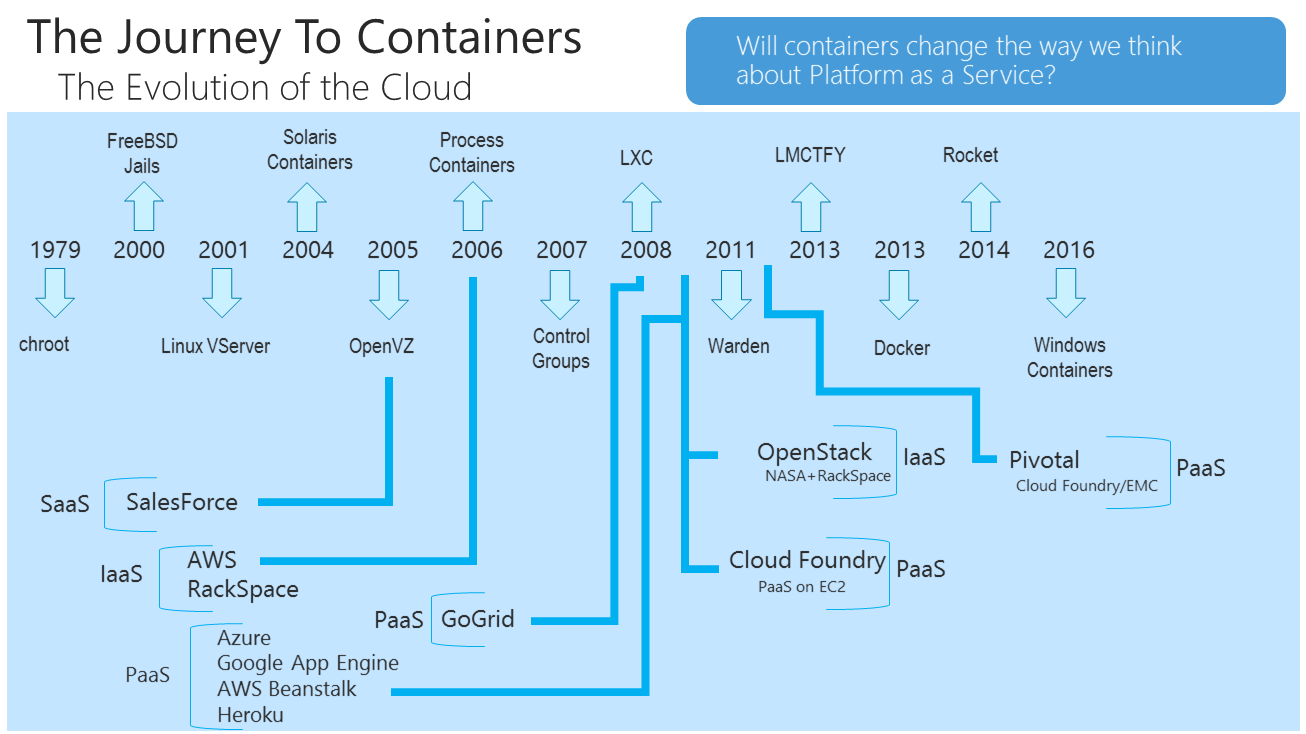

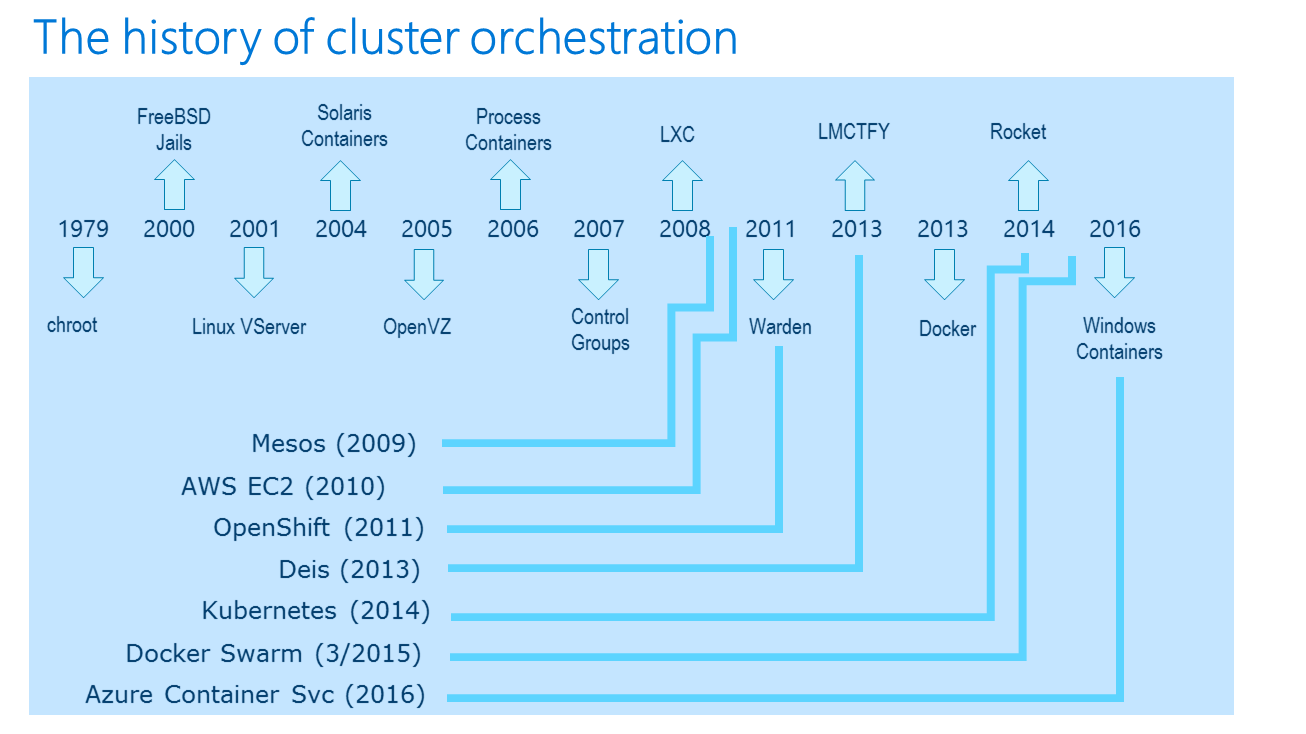

The really interesting takeaway here is that containerization actually started before cloud computing. You’ll notice that some of the work on containerization was influenced by technologies dating all the way back to 1979. In the early 2000s significant innovation was occurring, particularly with Linux and Solaris. But in 2006 Salesforce made a splash with Software as a Service. This was at least two years after Solaris began investing heavily at the operating system level to define containerization.

It is interesting to note that cloud computing started with Software as a Service. This application delivery model meant that there were no concerns about the underlying hardware or the maintenance of virtual machines. But this form of computing wasn’t the way traditional line-of-business applications ran in the enterprise. In order for enterprises to move to the cloud, they needed to take their on-premises applications that were running on virtual machines and run them in the cloud. That’s why Infrastructure as a Service became popular. But the maintenance of virtual machines represents a lot of manual labor. This led to the creation of Platform as a Service, a higher level of abstraction that allowed IT departments to think of the underlying infrastructure as a pool of compute power, not as a collection of virtual machines that need to be provisioned and maintained.

Later in this presentation we will start talking about container orchestration. Before we go there, we need to define what a container is and how it works.

But this slide should clear up any misconception that Docker created containers. Docker did not come on the scene until 2013.

Slide 3: The evolution of the cloud and containers

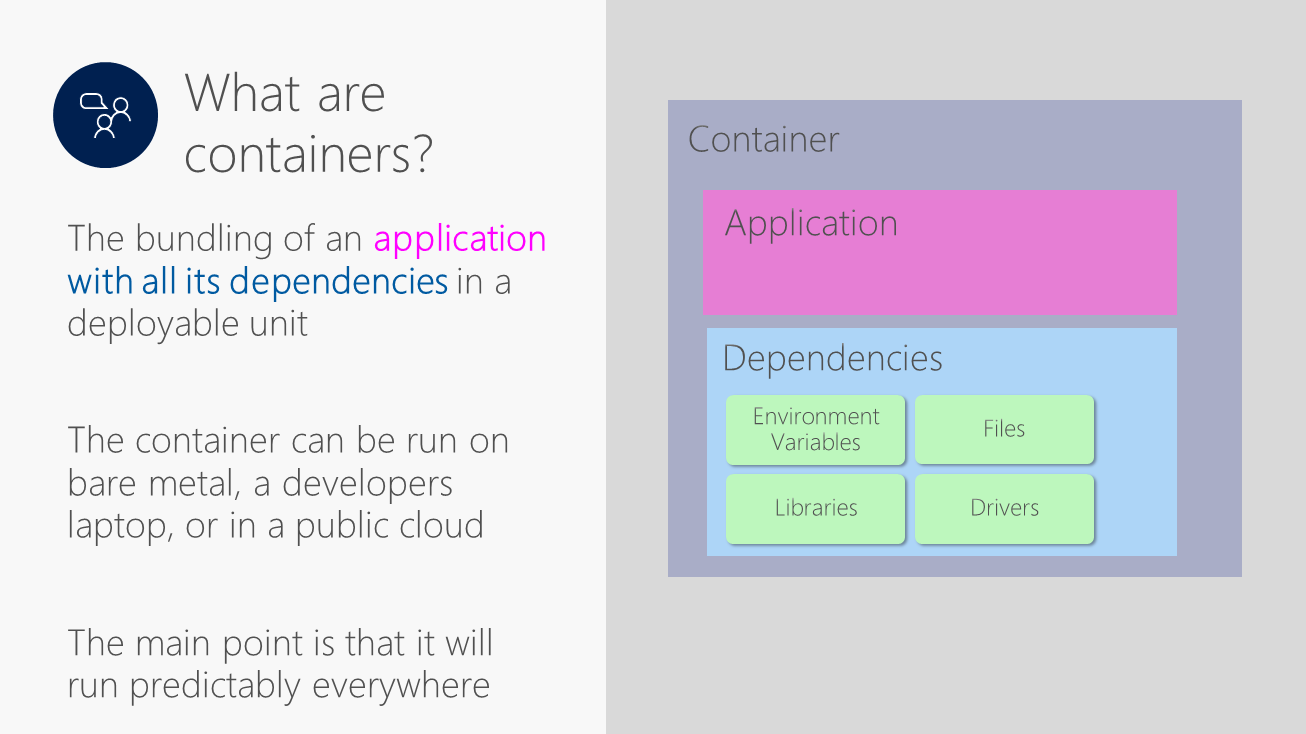

A good starting point is to describe what containerization means.

Conceptually, it is very simple. It’s a way of bundling an application with everything that it depends on. Because an application’s dependencies are bundled with the application itself, the application can be deployed just about anywhere without any requirements about what exists on the destination computer.

Historically, IT administrators had to make sure that the virtual machine on which the application would be installed already had the necessary dependencies. This complicated application deployments because it required IT administrators to always make sure the VM had the proper dependencies. Overwriting existing dependencies might affect other applications running on that virtual machine.

The key takeaway is that if an application runs well on one computer, copying the container to another computer should also work reliably and predictably.

Another way to think about it is that everything you need is in one place: the application as well as everything it depends on. Once again, this means that application delivery is predictable and reliable.

Slide 4: What exactly is a container?

DevOps has been around a long time and essentially speaks to delivering applications to users efficiently and with high quality. High-performing IT operations embrace DevOps, allowing them, in some cases, to deploy thousands of updates per day. It is well documented that frequent, high-quality software updates result in happy customers and even happy employees.

Slide 5: Containers make DevOps much better

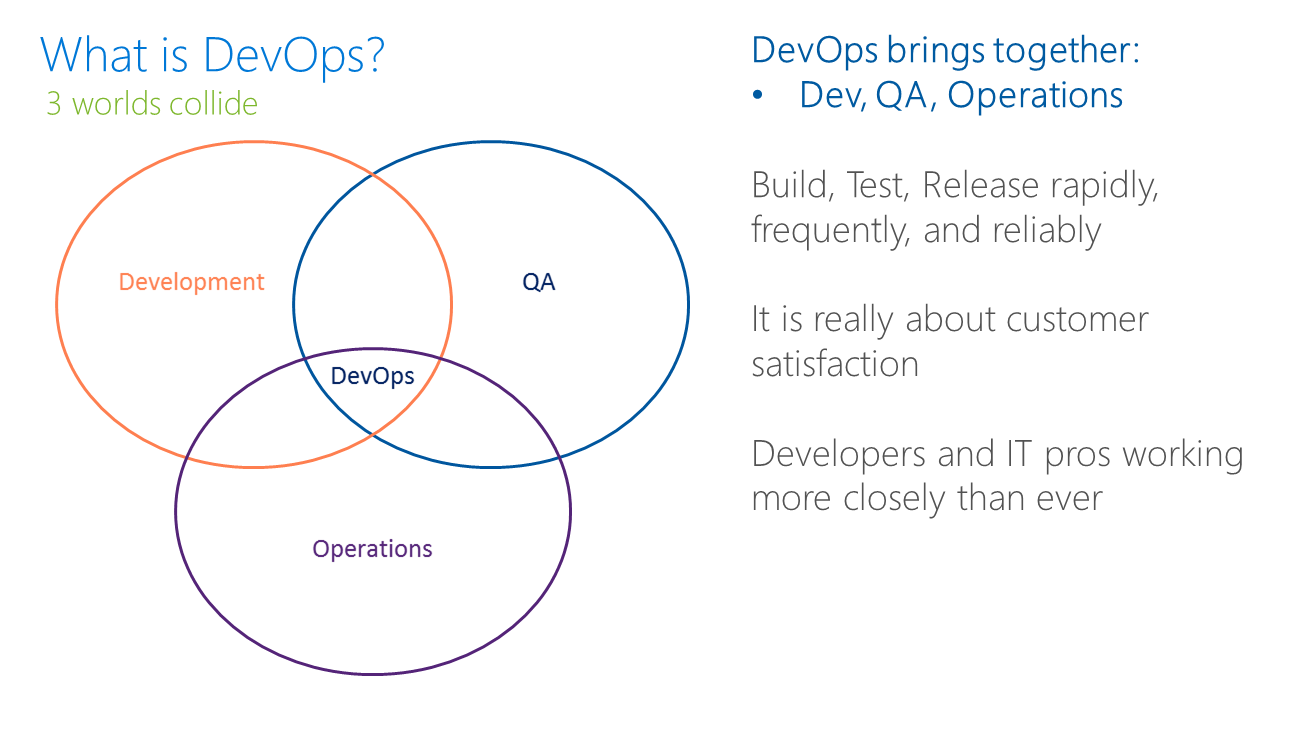

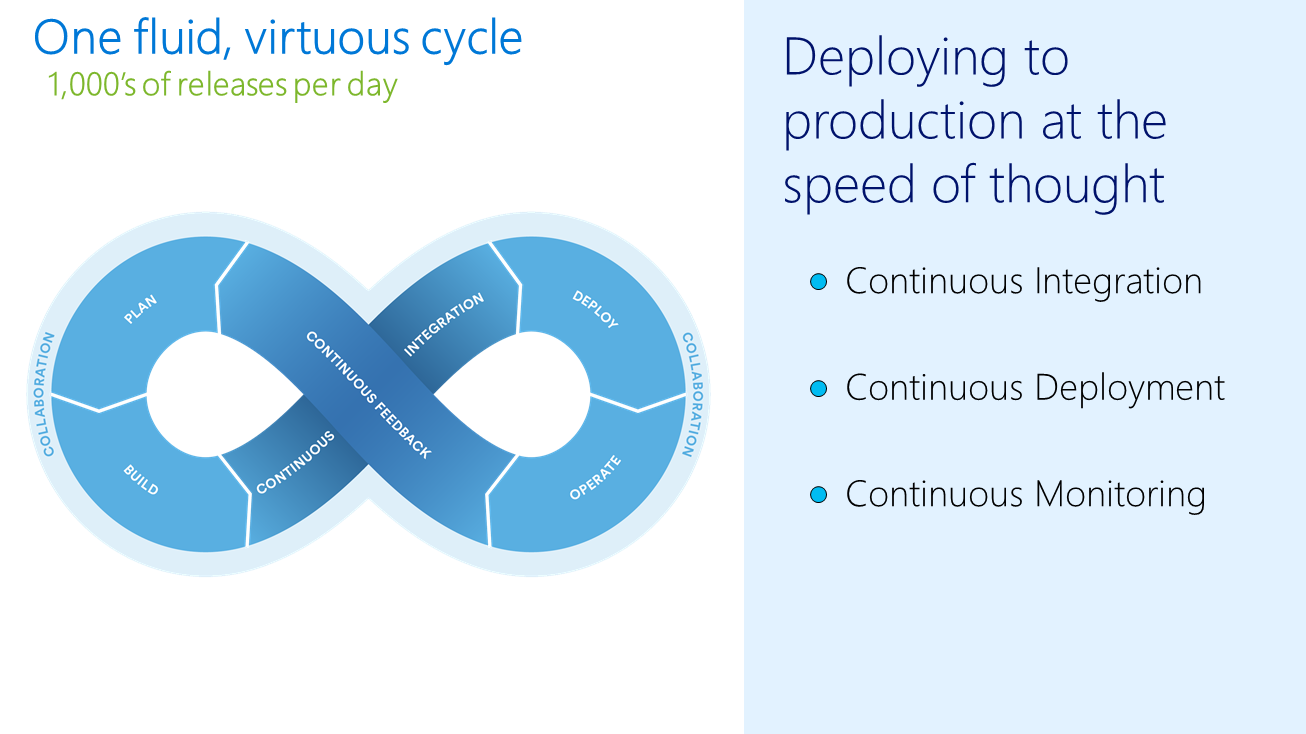

The diagram below drills more deeply into the meaning of DevOps.

DevOps is all about streamlining the application delivery process, bringing together the worlds of developers, quality assurance engineers, and IT operations. It’s all about building applications, testing them, and getting them into production quickly, reliably, and with high quality. Ultimately this is about responding to customer needs and giving customers exactly what they want in a timely fashion.

Companies that are good at this process can deliver application updates every 10 seconds throughout the day. In traditional scenarios it takes weeks, if not months or longer, to deliver application updates.

In this new model of application delivery, updates can be provided in real time and during peak application usage without any downtime. This capability is increasingly important in web and mobile scenarios. More broadly, it is relevant even for internal line-of-business applications in enterprises.

Slide 6: The intersection of development, quality assurance, and IT operations

Containerization plays a crucial role in enabling this rapid application delivery. The main reason is that containerized applications run more predictably as they are moved through the application delivery process, from a developer’s laptop, to the testing environment, to the staging area, and ultimately to production.

It is easy to think about scenarios where this might be important. Imagine a company that lets you submit your tax returns electronically. During peak tax season the ability to quickly and easily make updates to the live software is critical because money is on the line for the end user. Also imagine medical scenarios where life-and-death decisions are being made by software.

Imagine point-of-sale and retail scenarios where promotions or loyalty programs require frequent updates.

There are countless examples that point to the single fact that optimized software deployment is essential.

Slide 7: It is about frequent updates

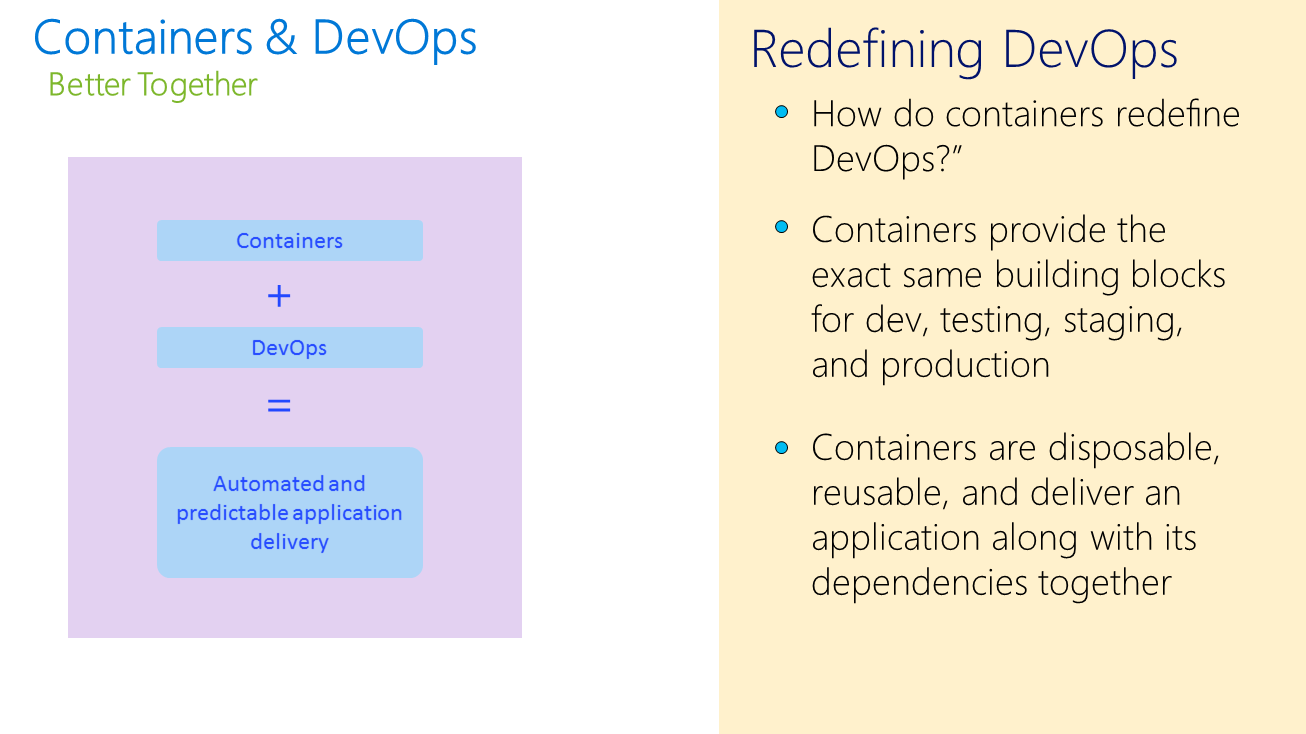

It should come as little surprise by now that containers have dramatically improved companies’ ability to practice DevOps. A container allows a developer to include all the important dependencies of the application being developed. That means when the developer checks the code in, a series of automated steps takes place.

The application is compiled, bundled up with its dependencies, and transformed into a runnable container that is automatically deployed into a testing environment, where a series of unit and functional tests can be executed to validate application correctness. If the application makes it through the testing process, the next natural place for it to move to is the staging environment, where some of the final sanity checks are applied to validate that the application is ready to move to production. All the while, containerization is what enables the predictable delivery of the application across all its environments, including development, testing, staging, and production.

When the application is ready, it can be deployed to production, once again inside its container, ensuring that the way the application behaves in production is the same way it behaved in testing and even on the developer’s computer.
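
The promotion flow described above can be sketched in a few lines of Python. This is only an illustrative model, not a real CI/CD tool: the `Image` class, the `promote` function, the stage names, and the check functions are all hypothetical names chosen for this example. The point it tries to show is that the same unchanged image moves through every stage, and that promotion stops at the first stage whose checks fail.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Image:
    """An immutable container image: the application plus its dependencies."""
    name: str
    tag: str


# Hypothetical stage names, in the order an image is promoted.
STAGES = ["testing", "staging", "production"]


def promote(image: Image, checks: Dict[str, Callable[[Image], bool]]) -> List[str]:
    """Run each stage's check against the same image; stop at the first failure."""
    reached = []
    for stage in STAGES:
        if not checks[stage](image):
            break
        reached.append(stage)
    return reached


image = Image(name="storefront", tag="1.4.2")

# Every stage passes, so the image reaches production unchanged.
all_pass = {stage: (lambda img: True) for stage in STAGES}
reached_all = promote(image, all_pass)

# The staging sanity check fails, so the image never reaches production.
staging_fails = dict(all_pass, staging=lambda img: False)
reached_partial = promote(image, staging_fails)

print(reached_all)
print(reached_partial)
```

Because `Image` is frozen, nothing in the pipeline can alter it between stages, which mirrors the idea that what was tested is exactly what gets deployed.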

Slide 8: DevOps made better with containers

The main takeaway from this post is that containerization is playing a central role in the world of DevOps. Let’s not forget that widespread use of containerization is only a few years old.

DevOps pre-dates the world of Docker containers. But with the advent of containerization, DevOps is undergoing a dramatic change, a change that is improving the ability for companies to deliver application updates frequently and with higher quality.

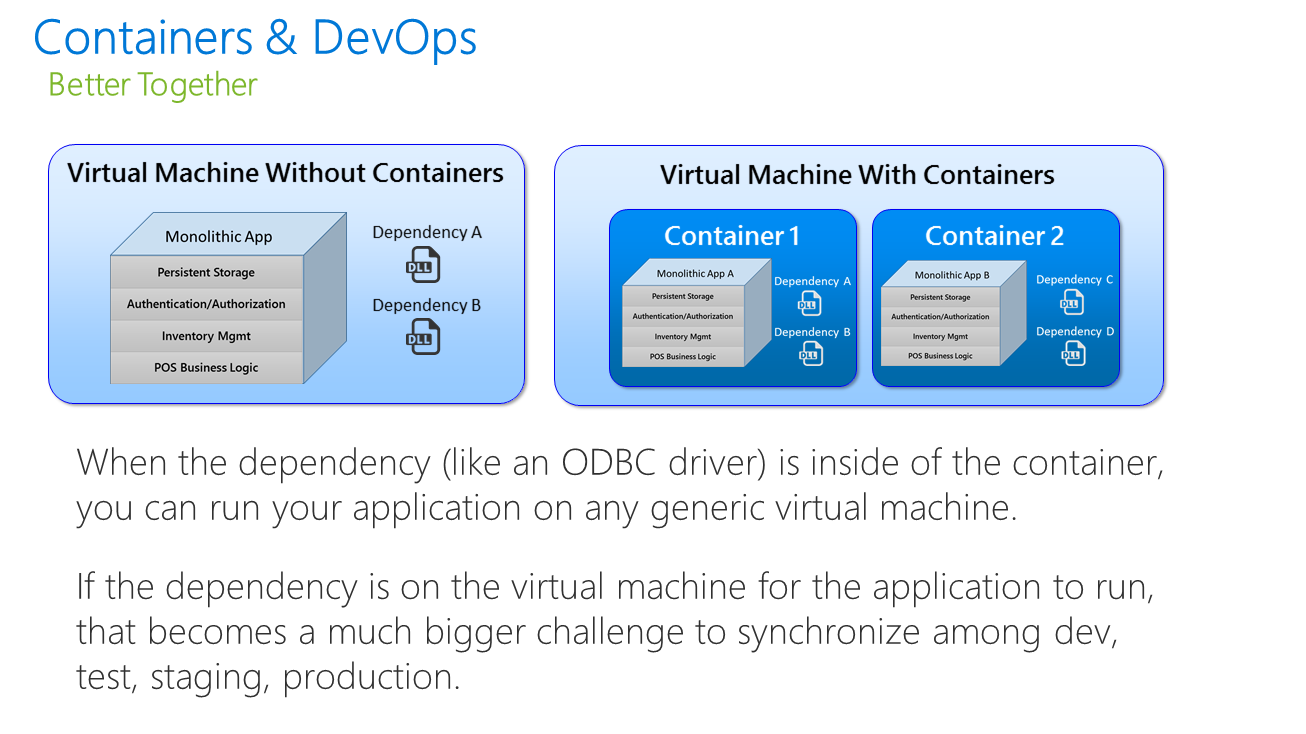

Containers also make it possible to deploy applications on a generic virtual machine that does not need to be preconfigured to support that application. This provides more flexibility because an entire pool of VMs can be treated generically, making it possible to use any of the virtual machines, not just the ones that have been prepared to accept a specific application.

Slide 9: The application behaves the same way in development, testing, staging, and production

On the left side

The left side of the diagram below depicts a scenario in which the dependencies of an application are installed on the virtual machine, not inside the container. This means that if one of the dependencies (A or B) changes, the application may fail because it is out of sync with the changed dependency.

On the right side (containerized version)

On the right side of the diagram, the dependencies are bundled with the application. Each application carries its own dependencies, so a change made for one application does not affect another. This virtually eliminates any mismatch between an application and its dependencies.
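
The difference between the two sides of the diagram can be illustrated with a toy Python model. This is a sketch under simplifying assumptions: the dependency names A and B come from the diagram, but the version numbers, the `runs` function, and the rule that an application needs an exact version match are hypothetical choices made for this example.

```python
from typing import Dict


def runs(requires: Dict[str, str], available: Dict[str, str]) -> bool:
    """An app runs only if every dependency it needs is present at the exact version."""
    return all(available.get(name) == version for name, version in requires.items())


# Hypothetical requirements for two applications.
app1_requires = {"A": "1.0"}
app2_requires = {"A": "1.0", "B": "2.0"}

# Left side: dependencies A and B are installed on the VM and shared by both apps.
vm_libraries = {"A": "1.0", "B": "2.0"}
shared_before = [runs(app1_requires, vm_libraries), runs(app2_requires, vm_libraries)]

# Someone upgrades A on the VM; both apps are now out of sync with it.
vm_libraries["A"] = "1.1"
shared_after = [runs(app1_requires, vm_libraries), runs(app2_requires, vm_libraries)]

# Right side: each container carries its own copy of its dependencies,
# so the change made on the VM does not reach them.
container1 = {"requires": app1_requires, "bundled": dict(app1_requires)}
container2 = {"requires": app2_requires, "bundled": dict(app2_requires)}
containerized_after = [runs(c["requires"], c["bundled"]) for c in (container1, container2)]

print(shared_before, shared_after, containerized_after)
```

Real dependency resolution is more forgiving than an exact version match, but the model captures the core idea: a shared dependency is a shared point of failure, while a bundled one is not.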

Slide 10: What the virtual machine looks like with and without containers

A fully automated application delivery pipeline is the endgame here. At each step in the process containers play an essential role. The core point is that all the dependencies of an application travel with it through this process. The other advantage is that if any failures are discovered in production, it is very easy to roll back to a previous version. It’s a simple case of stopping the container in production and running the prior version, which is already sitting there and just needs to be started. This typically takes less than a second.
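
The rollback idea can be sketched as follows. This is a minimal model, assuming the earlier image versions are still available on the host; the `Deployment` class and its method names are hypothetical and do not correspond to any particular container runtime's API.

```python
from typing import List, Optional


class Deployment:
    """Tracks the running image tag and the earlier tags that are still on the host."""

    def __init__(self) -> None:
        self.history: List[str] = []        # previously running tags, oldest first
        self.running: Optional[str] = None  # tag currently serving traffic

    def deploy(self, tag: str) -> None:
        """Start a new version and remember the one it replaces."""
        if self.running is not None:
            self.history.append(self.running)
        self.running = tag

    def rollback(self) -> str:
        """Stop the current version and run the most recent earlier one."""
        if not self.history:
            raise RuntimeError("no earlier version to roll back to")
        self.running = self.history.pop()
        return self.running


prod = Deployment()
prod.deploy("1.0")
prod.deploy("1.1")          # a failure is discovered in 1.1
restored = prod.rollback()  # the prior image just needs to be started again
print(restored)
```

Nothing is rebuilt or reinstalled during the rollback; the previous version is simply selected and run again, which is why the operation can be so fast.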

Slide 11: Streamlining the DevOps workflow

The standard vocabulary in this world includes such terms as continuous integration, continuous delivery, and continuous deployment, not to mention continuous monitoring. The traditional approach of periodic releases has given way to one in which software is always being delivered and releases take place all the time, in one fluid work stream.

The key takeaway below is that there is a constant flow of application delivery. As changes are made, they are tested and rolled into production as quickly as possible. No longer are there periods of weeks or months before updates are sent to production.

Slide 12: Continuous integration, deployment, monitoring

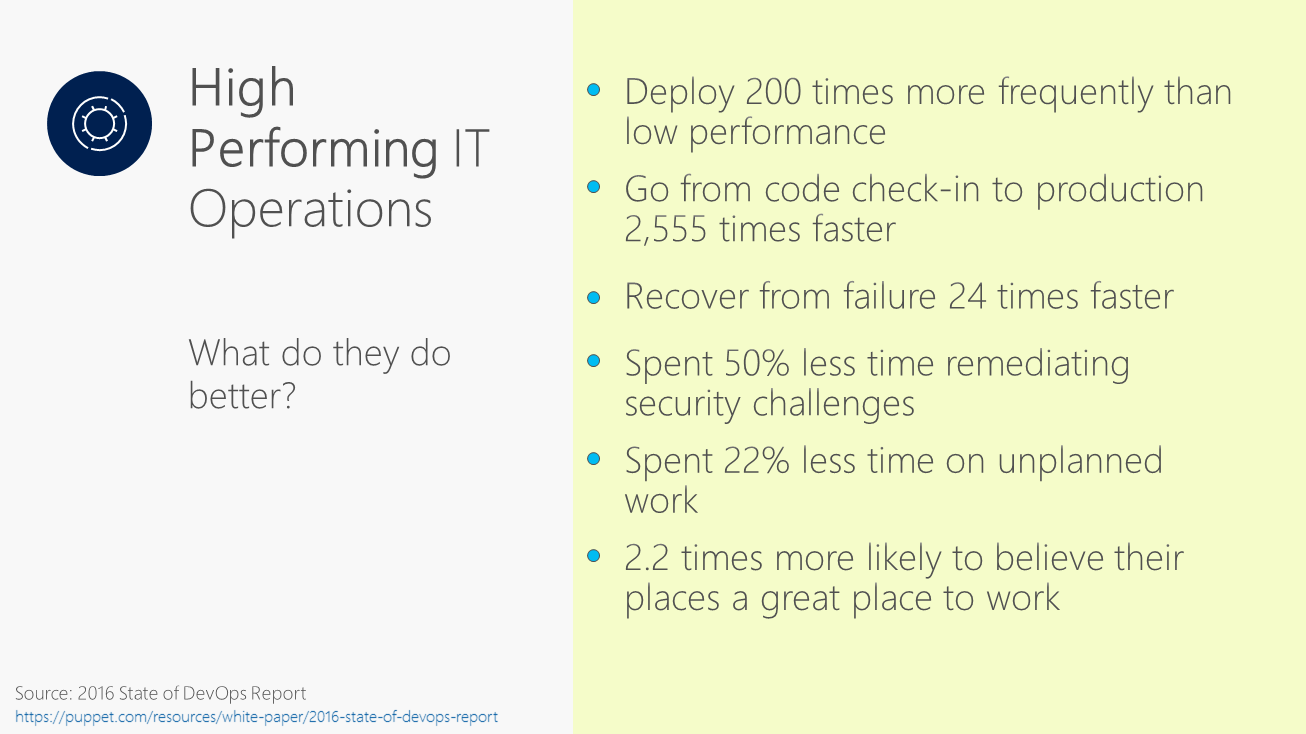

So now we get to the real reason that containerization is important: economics. The benefits are many and varied. The report referenced in the slide below provides detailed information. There is a clear relationship between an optimized application delivery mechanism and customer and employee satisfaction. This should come as no surprise.

The ultimate benefit for a business is that it lowers costs and increases revenue. It is easy to measure lower costs through the reduction in the cost of supporting IT infrastructure. More difficult to measure are customer acquisition, customer retention, and customer satisfaction. Many of the Fortune 1000 companies now consider themselves software companies.

The ability to monetize data, make predictions, and take actions based on machine learning algorithms is playing an increasing role in modern business. The competitive advantage of a company is rooted in its application development and delivery capabilities. Adapting quickly to a changing competitive or business landscape is crucial.

Slide 13: High-performing IT operations

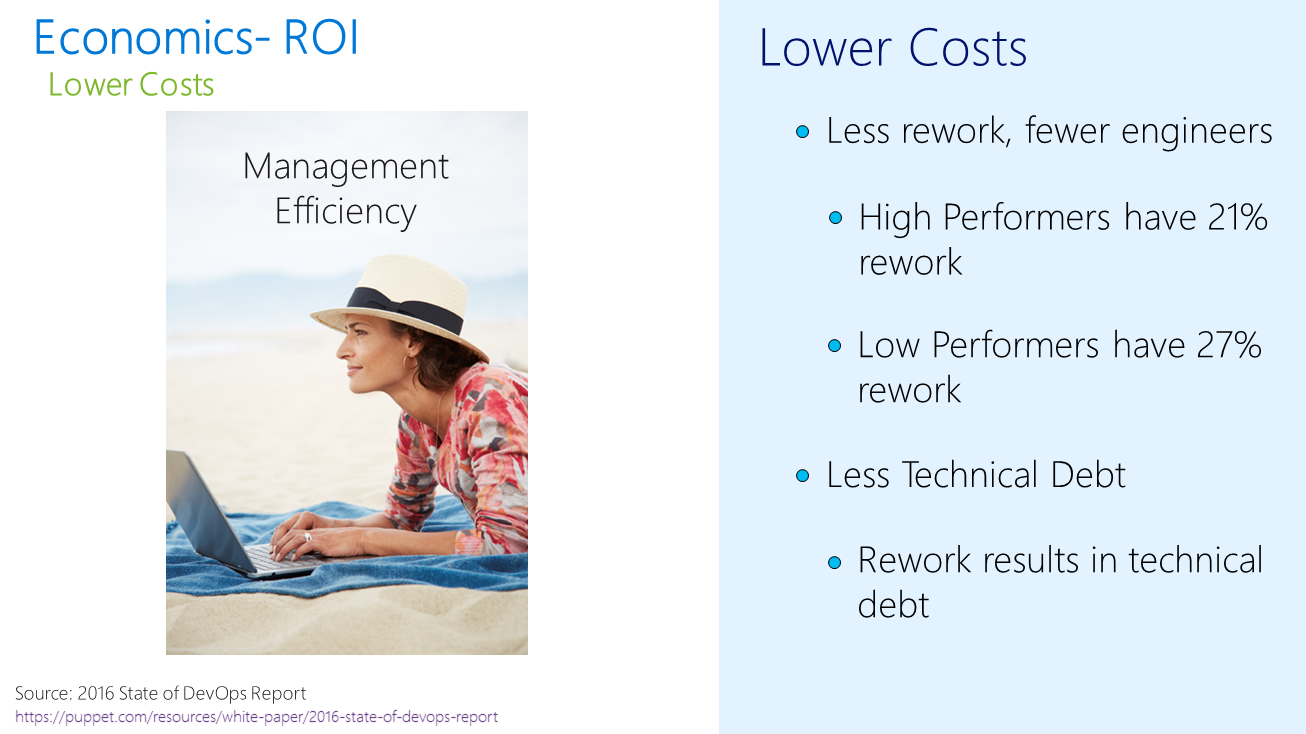

High-performing IT operations reduce the amount of unnecessary work caused by failures in the application delivery process. In addition, they accrue less technical debt, meaning that software rewrites do not compromise the architectural integrity of applications. Oftentimes, companies apply last-minute changes to the software to reduce downtime in production. Scrambling to fix software under pressure often results in architectural decay, also known as technical debt.

Slide 14: Lowering the cost of IT

Application failure is extremely expensive. It could mean that a website is unable to accept orders from customers. It could mean a disruption of the supply chain pipeline. In worst-case scenarios, it can threaten human life, such as in medical care scenarios. More abstract is the damage caused to the company brand by frustrated customers.

Slide 15: Avoiding application failure at all costs

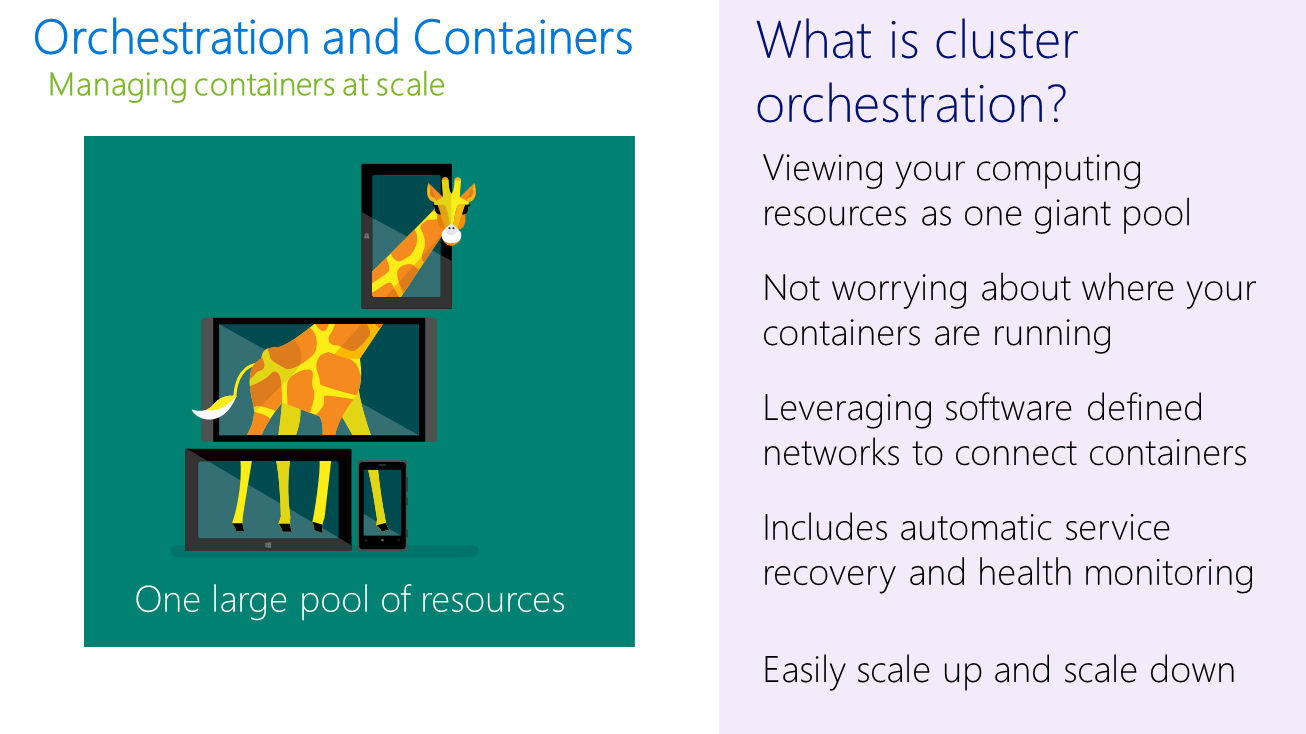

Some organizations run tens of thousands of containers at the same time. When operating at that scale, it is impossible to concern yourself with individual virtual machines and directing containers to run on them. Instead, it is important to look at your computing infrastructure as one giant pool of compute, networking, storage, and memory. That is what a cluster orchestrator enables.
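
To make the "one giant pool" idea concrete, here is a deliberately simplified placement sketch in Python. It is not how Mesos, Kubernetes, or Docker Swarm actually schedule work; the `schedule` function, the VM and container names, and the single "CPU units" resource are hypothetical simplifications. It only illustrates that the operator states what each container needs and the orchestrator, not a person, decides which machine runs it.

```python
from typing import Dict


def schedule(containers: Dict[str, int], vms: Dict[str, int]) -> Dict[str, str]:
    """Place each container (name -> CPU units needed) on the VM with the most free capacity."""
    free = dict(vms)  # copy so the caller's capacity table is left untouched
    placement: Dict[str, str] = {}
    for name, need in containers.items():
        target = max(free, key=free.get)  # VM with the most free CPU units
        if free[target] < need:
            raise RuntimeError(f"no VM has room for {name}")
        free[target] -= need
        placement[name] = target
    return placement


# Hypothetical pool of two VMs and three containers.
pool = {"vm-a": 4, "vm-b": 2}
workload = {"web": 2, "api": 2, "worker": 2}

placement = schedule(workload, pool)
print(placement)
```

A production orchestrator also weighs memory, storage, networking, and failure recovery, but the caller's view is the same: a workload goes in and a placement comes out, with no VM chosen by hand.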

Slide 16: Orchestrating containers

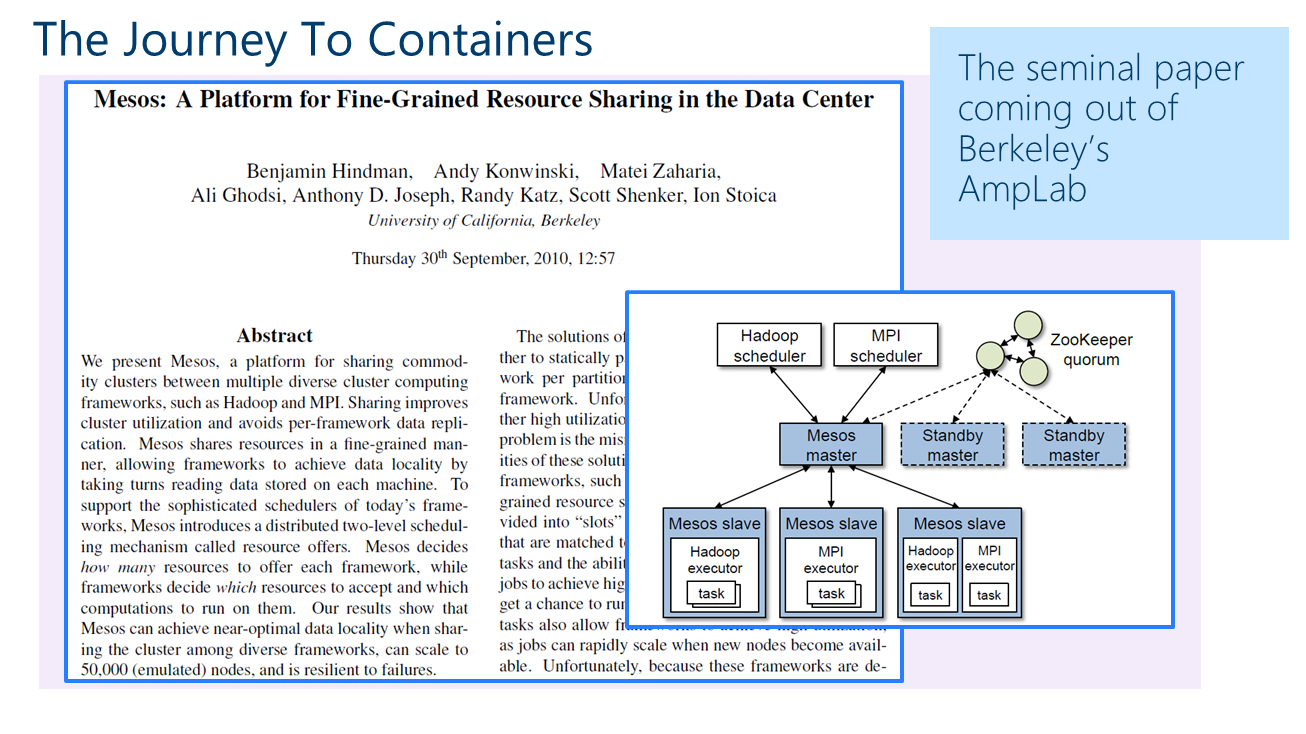

This technology got started around 2008. There is very significant competition among companies in this space trying to capture mind share. The notion of a cluster orchestrator really got started back in 2009, with the introduction of Apache Mesos. AWS was quick to respond, but did so with a highly proprietary solution, locking you into their custom API.

Containers, along with the associated applications, are generally run inside a cluster. Deploying and running containers on a cluster is the job of a cluster manager, also called a cluster orchestrator. The popular orchestration technologies today for running Docker containers include: (1) Mesos; (2) Docker Swarm; (3) Kubernetes. Of the three, Mesos has been around the longest, since 2009, while Kubernetes and Docker Swarm have only been around three years or less. With varying degrees of completeness, these orchestrators also provide the ability to scale, recover from failure, and treat a set of virtual machines as a single pool of compute resources.

Slide 17: The history of orchestration

You are invited to read this white paper, published in 2010. The work done at Berkeley inspired two big software companies to take advantage of this form of distributed computing. Both Databricks and Mesosphere were formed based on this work.

Slide 18: The seminal paper that redefines distributed computing

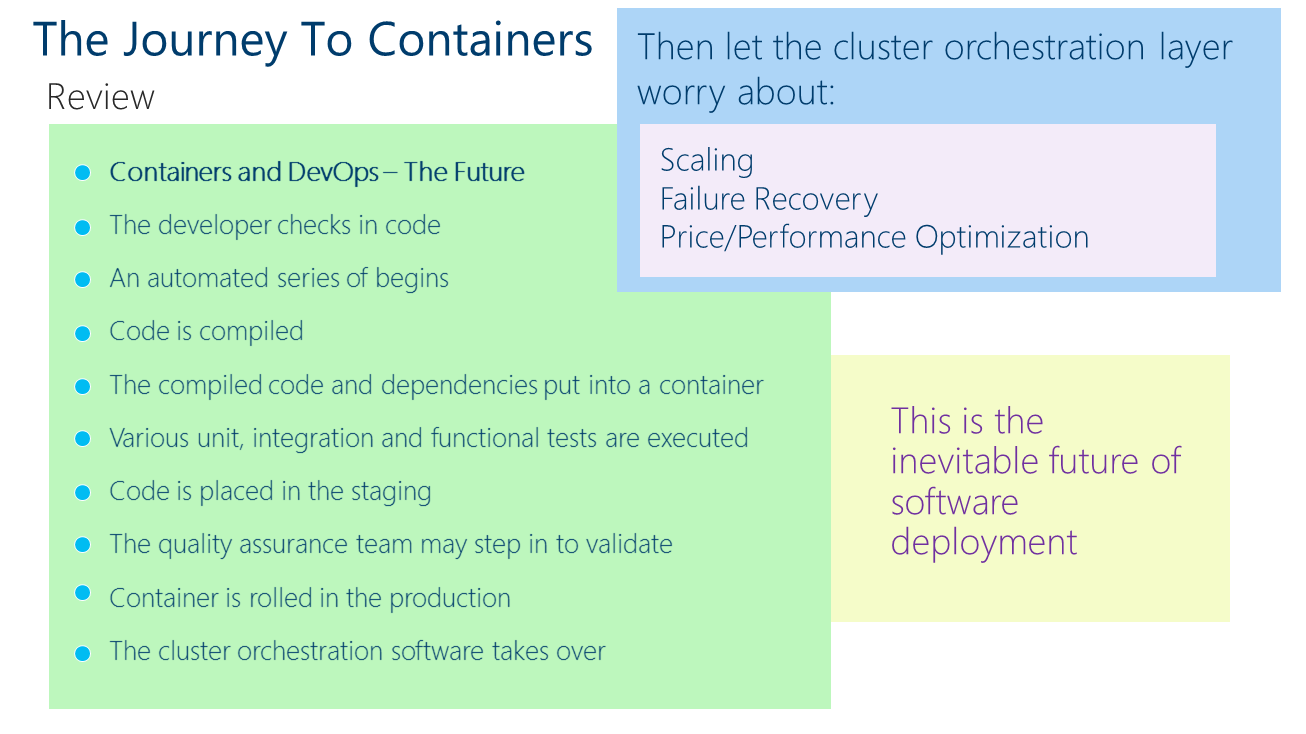

This slide encapsulates the main points that we addressed in this post. At the end of the day it’s really about getting quality code and applications into production as frequently and as accurately as possible. Moreover, once these containers make it into production, they run on an orchestrated cluster that manages the lifecycle of the container and its application.

Slide 19: Wrap-up and conclusion