Embracing Semantic Logging

UPDATE 4/25/2013: The Semantic Logging Application Block final version is released. Get it via NuGet.

UPDATE 2/14/2013: The Semantic Logging Application Block CTP is now available.

In the world of software engineering, every system needs to log. Logging helps to diagnose and troubleshoot problems with your system both in development and in production. This requires proper, well-designed instrumentation. All too often, however, developers instrument their code to do logging without having a clear strategy and without thinking through the ways the logs are going to be consumed, parsed, and interpreted. Valuable contextual information about events frequently gets lost, or is buried inside the log messages. Furthermore, in some cases logging is done simply for the sake of logging, more like a checkmark on the list. This situation is analogous to people fallaciously believing their backup system is properly implemented by enabling the backup but never, actually, trying to restore from those backups.

This lack of a thought-through logging strategy results in systems producing huge amounts of log data which is less useful or entirely useless for problem resolution.

Many logging frameworks exist today (including our own Logging Application Block and log4net). In a nutshell, they provide high-level APIs to help with formatting log messages, grouping (by means of categories or hierarchies) and writing them to various destinations. They provide you with an entry point – some sort of a logger object through which you call log writing methods (conceptually, not very different from Console.WriteLine(message)). While supporting dynamic reconfiguration of certain knobs, they require the developer to decide upfront on the template of the logging message itself. Even when this can be changed, the message is usually intertwined with the application code, including metadata about the entry such as the severity and entry id.

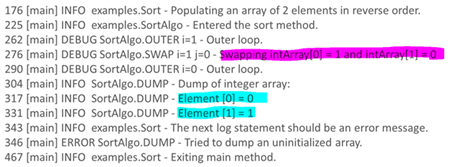

Thus, the discrete event payloads get flattened and the unstructured or semi-structured logging messages become the norm. Take a look at the following section of a typical log file:

This is what typical string-and-property-bag-based logging produces. While it may contain useful info such as the level (priority) of the log event and its source, the log message itself is totally flattened into a string. In order to parse it, knowledge of the format/template is required and that format/template must have been consistently applied in the application. This is especially true, if the log data needs to be post-processed (aggregated, filtered etc.) This pre-requisite knowledge gets lost if you are using unstructured logging frameworks today. The messages highlighted in pink and light blue demonstrate the compatibility problem, where you must actually know both templates. This problem is exacerbated when the developers are long gone; the IT/Pros are faced with the hassle of interpreting these kinds of messages, many of which don’t even use intuitive names.

Also, the code to generate log entries and make calls through logging framework facades, often pollutes the business logics and make the core code less readable.

Imagine another world, where the events get logged and their semantic meaning is preserved. You don’t lose any fidelity in your data. Welcome to the world of semantic logging. Note, some people refer to semantic logging as “structured logging” , “strongly-typed logging” or “schematized logging” .

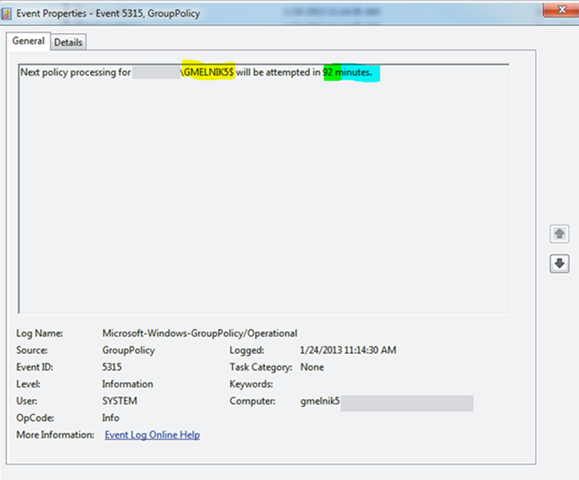

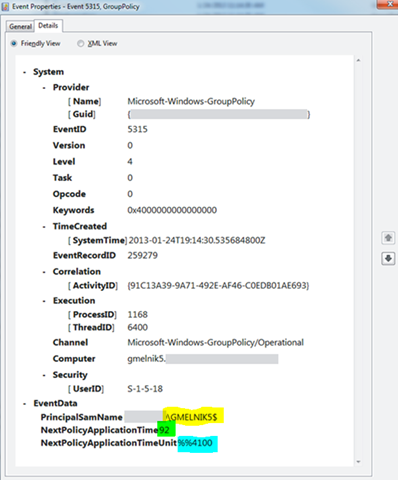

Take a look at the next example. Here I took an event from the Event Log.

It may appear that this is no different than the event message discussed earlier. This is because the General tab formats the event data into a human readable string. It flattens it, but it’s an artifact of the tool, and it only displays this way because I, the reader, chose to view it this way. Underneath the raw message in structured format is preserved. Switching to Details, I can see that along with some system metadata, I get the event specific discrete payload, which corresponds to the message displayed earlier (I used color highlighting to emphasize how different parts of the event got used to form the message):

We hit the hassle of dealing with unstructured logging when working on the Tailspin sample app for the Autoscaling Application Block (“Wasabi”) a year ago. All visualizations for monitoring (demoed here, starting 00:16:23) would have been so much simpler to implement, had we had semantic logging in place.

The technology to enable semantic logging in Windows has been around for a while (since Windows 2000). It’s called ETW – Event Tracing for Windows. It is a fast, scalable logging mechanism built into the Windows operating system itself. As Vance Morrison explains, “it is powerful because of three reasons:

1. The operating system comes pre-wired with a bunch of useful events

2. It can capture stack traces along with the event, which is INCREDIBLY USEFUL.

3. It is extensible, which means that you can add your own information that is relevant to your code.

I’m not going to get into the details of ETW and its architecture. This MSDN article does a good job with that. What I’d like to emphasize here is that ETW is based on a different logging paradigm where you must create a class representing an event (an ETW Event Provider). Historically, this has been a non-trivial task. It also involved foreign, unnatural to .NET developers concepts (like authoring manifests) and required tedious steps. This could be one of the reasons the ETW adoption was slow (our research confirms that).

To address this, the System.Diagnostics.Tracing.EventSource type was added in the .NET Framework 4.5. It makes writing ETW providers easier and much more natural to the way .NET developers typically work. In an nutshell, developers specify an EventSource subsclass, where each of its methods defines an event and its signature defines the event’s discrete payload. This separates the code that defines events from the code that raises the events, providing the application-specific instrumentation API. Events published through methods in an EventSource class are published to ETW, and there is also the possibility of adding other destinations for events using custom Event Listener implementations. Vance Morrison provides an in-depth explanation of what kind of problem the EventSource class is solving and how. I highly recommend you watch his video tutorial on generating your own events with EventSources and the companion blog entry.

While the EventSource provides a great idiomatic way for .NET developers to author ETW events, it doesn’t come with any of the familiar destinations for logging (rolling file, database, etc.). ETW uses its own destinations such as .etl files, and uses different tools for parsing and analyzing log data.

This is where the new Semantic Logging Application Block comes in – it makes it easier to incorporate the EventSource functionality and to manage the logging behavior of your system. It makes it possible to inherit most of the structure from the ETW pipeline, including event metadata and payload, to write log messages to multiple destinations, such as flat files, databases, or Windows Azure table storage, and to control what your system logs by setting filters and logging verbosity. Note, that the event flattening problem that I have described with the unstructured logging, may still be an issue when using the file-based destinations of the Semantic Logging Application Block. But the flattening does not occur where the logging request takes place, it occurs when the log message is written and that’s the core enabler of semantic logging.

We’ve designed the Semantic Logging Application Block for .NET developers. The block is intended to help you move from the traditional, unstructured logging approach (such as that offered by the Logging Application Block) towards the semantic logging approach offered by ETW. The Semantic Logging Application Block enables you to use the EventSource class and semantic log messages in your applications without moving away from the log formats you are familiar with. Importantly, you do not need to commit to how you consume events when developing business logic; you have a unified application-specific API for logging and then you can decide later whether you want those events to go to ETW or alternative destinations. In the future, you can easily migrate to a complete ETW-based solution without modifying your application code: you continue to use the same custom EventSource class, but use ETW tooling to capture and process your log messages instead of using the Semantic Logging Application Block event listeners.

If with a traditional logging infrastructure, such as that provided by the Logging Application Block, you might record an event exhibited in the previous example as shown in the following code sample:

myLogWriter.Write(

new LogEntry {

Severity = TraceEventType.Information,

EventId = 5315,

Message = string.Format(

CultureInfo.CurrentCulture,

EventResources.NextPolicyProcessing,

username,

nextAttemptTime,

nextAttemptTimeUnitName),

Categories = { "Policy Processing" }});

Using the Semantic Logging Application Block, you would record the same event by:

MyCompanyEventSource.Log.NextPolicyProcessing (username, nextAttemptTime, nextAttemptTimeUnit);

where the NextPolicyProcessing() is defined separately in the MyCompanyEventSource like so, for example:

[Event(5315, Level = EventLevel.Information, Message = "Next processing for {0} will be attempted in {1} {2}", Keywords = Keywords.PolicyProcessing)]

internal void NextPolicyProcessing(string username, int nextAttemptTime, TimeUnit nextAttemptTimeUnit)

{

...

}

Notice how with the Semantic Logging Application Block you are simply reporting the fact that some event occurred that you might want to record in a log. You do not need to specify an event ID, a priority, or the format of the message when you write the log message in your application code. This approach of using strongly typed events in your logging process provides the following benefits:

- You can be sure that you format and structure your log messages in a consistent way because there is no chance of making a coding error when you write the log message.

- It is easier to query and analyze your log files because the log messages are formatted and structured in a consistent manner.

- You can more easily parse your log data using some kind of automation.

- You can more easily consume the log data from another application. For example, in an application that automates activities in response to events that are recorded in a log file.

- It is easier to correlate log entries from multiple sources.

Of course, this requires you to author the MyCompanyEventSource class (subclass of the EventSource). But it makes sense for the developers to do so. It puts the effort in the right place: instead of putting all the effort in the parsing of the flat messages (and often failing at that), you put the effort into structuring your events properly in the first place. Besides, we are thinking of providing some additional aiding tools (snippets and/or FxCop rules) to make authoring of event methods even easier.

You’ll be able to use the Semantic Logging Application Block to create an out-of-process logger. This will help to reduce the logging overhead in your LOB applications because most of the processing of log messages takes place in a separate application. This is especially useful if you have a requirement to collect high volumes of trace messages from a production system. The out-of-process logger included with the Semantic Logging Application Block can also be dynamically reconfigured simply by editing a configuration file, enabling you, for example, to easily change the level of logging on the fly without touching your production system.

As we release the CTP of the Semantic Logging Application Block, we’ll demonstrate how to construct your own EventSources and route your logging to various destinations. This blog post is intended to describe the type of problem we are trying to solve and to entice you to think about the semantic logging paradigm.

In conclusion, I encourage you to read Vance Morrison’s tutorials and familiarize yourself with the EventSource. Start thinking about your logging in the new way. The Semantic Logging Application Block we are building is going to be a stepping-stone for you to get from a traditional, unstructured logging approach (such as that taken by the Logging Application Block or log4net), to a modern, semantic approach as provided by ETW.