Autoscaling Windows Azure applications

As I announced previously, my team has been heads-down working on the new Windows Azure Integration Pack for Enterprise Library. Autoscaling emerged as one of the top-ranked stories for the pack (it is a frequently requested feature from the product group and it gathered a lot of votes on our backlog too). In this post, I’d like to provide an update on our thinking about the scenarios we are addressing and on our implementation progress. As you read this, please tell us what you think: validate our scenarios or suggest what to revise. We are actively listening!

Why?

Why would you want to auto-scale? To take advantage of the elastic nature of the Windows Azure platform, you’d want your application to automatically handle changes in the load levels over time and to scale accordingly. This will help to meet necessary SLAs and also reduce the number of manual tasks that your application operator must perform, while staying on budget.

How?

We envision that users will specify various autoscaling rules and then expect the block to enact them. To that end, we will provide a new block, the Autoscaling Application Block. It will contain mechanisms for collecting data, evaluating rules, reconciling rules and executing various actions across multiple targets.

Based on conversations with advisors, the primary persona for the Autoscaling Application block is the application operator, not the developer. We are constantly reminding ourselves of this when designing the ways for interacting with the block.

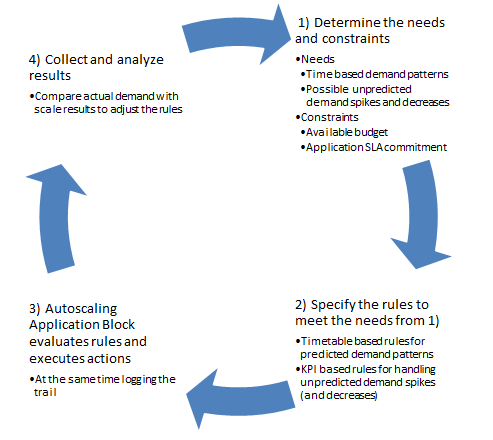

Importantly, the process of defining the rules is iterative, as shown in the picture below.

Operations personnel will likely need to go through these stages multiple times to refine the rules.

Which rules are to be supported?

There are 2 types of rules we support:

1) constraint rules:

- are associated with a timetable; help respond to predicted increases/decreases of activities;

- proactively set limits on the minimum and maximum number of role instances in your Windows Azure application;

- help to guard your budget;

- have a rank to determine precedence if there are multiple overlapping rules;

- example: Rule with Rank 1 = Every last Friday of the month, set the range of the Worker Role A instances to be: min = 3, max = 8

2) reactive rules:

- are based on some KPI that can be defined for your app; KPIs can be defined using perf counters (e.g. CPU utilization) or business metrics (e.g. # of unprocessed purchase orders);

- reactively adjust the current count of role instances (by incrementing/decrementing the count by an absolute number or by a proportion) or perform some other action (action types are described below);

- help respond to unexpected bursts or collapses in your application’s workload and to meet your SLA.
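To make the constraint-rule idea more tangible, here is a minimal sketch in Python. It is only an illustration of the concept, not the block's API (the block itself is a .NET component). The `ConstraintRule` class, the helper functions and the "default" rule are all hypothetical. I am also assuming that a larger rank wins when rules overlap and that the timetable can be reduced to a simple predicate on the current time; neither is settled by this post.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, List, Optional


@dataclass
class ConstraintRule:
    """Hypothetical model of a constraint rule (not the block's real API)."""
    name: str
    rank: int                               # assumption: larger rank takes precedence
    is_active: Callable[[datetime], bool]   # stands in for the rule's timetable
    min_instances: int
    max_instances: int


def last_friday_of_month(now: datetime) -> bool:
    """True when `now` is a Friday and the following Friday is in another month."""
    return now.weekday() == 4 and (now + timedelta(days=7)).month != now.month


def effective_rule(rules: List[ConstraintRule], now: datetime) -> Optional[ConstraintRule]:
    """Pick the highest-ranked rule whose timetable matches `now`, if any."""
    active = [r for r in rules if r.is_active(now)]
    return max(active, key=lambda r: r.rank) if active else None


def clamp_instance_count(desired: int, rule: Optional[ConstraintRule]) -> int:
    """Keep a desired instance count inside the rule's [min, max] range."""
    if rule is None:
        return desired
    return max(rule.min_instances, min(desired, rule.max_instances))


RULES = [
    # Invented fallback rule, only here to show an overlap being resolved by rank.
    ConstraintRule("default", 0, lambda now: True, 2, 4),
    # The example from the list above: last Friday of the month, min = 3, max = 8.
    ConstraintRule("month-end", 1, last_friday_of_month, 3, 8),
]
```

With these rules, a request for 10 instances on the last Friday of a month would be capped at 8, while the same request on any other day would be capped at 4 by the invented default rule.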

A reactive rule is based on a metric (an abstract quantity that you want to monitor). The block will collect data points (which are effectively metric values with corresponding timestamps) at a specific sampling rate, which you can configure. To define a KPI, a user will combine a KPI aggregate function (such as AVERAGE, MIN, MAX, SUM, COUNT, LAST, TREND, etc., applied to the data points collected over a period of time) with a KPI goal. A reactive rule will specify what action needs to be taken based on whether the KPI goal is met or not. For example:

- Metric: CPU usage

- Source: Worker Role A, all instances

- Target: Worker Role A

- Data points: metric values [80%, 40%, 60%, 80%] collected every 10 secs (sampling rate) with corresponding timestamps

- KPI Aggregate function: AVERAGE (Data points) for the past 1 hr

- KPI Goal: < 75%

- KPI 1 (user-named): If KPI Aggregate function for specific Source meets the KPI Goal, then KPI status = green

- Rule R1 (user-named): If KPI 1 status = red, then increment instance count of Target by 1
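The CPU example above can be walked through in a few lines of Python. This is a sketch of the evaluation logic only, not the block's implementation. The `DataPoint` class and both functions are hypothetical names, and I am assuming that a KPI with no data in the window yields no decision and that any KPI that does not meet its goal counts as red.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import List


@dataclass
class DataPoint:
    """A metric value with its timestamp (hypothetical type)."""
    timestamp: datetime
    value: float  # CPU usage in percent


def kpi_status(points: List[DataPoint], now: datetime,
               window: timedelta, goal: float) -> str:
    """AVERAGE the data points inside the window and compare with the goal."""
    recent = [p.value for p in points if now - window <= p.timestamp <= now]
    if not recent:
        return "unknown"  # assumption: no data means no decision
    # KPI Goal "< 75%": green when the average is below the goal, red otherwise.
    return "green" if mean(recent) < goal else "red"


def apply_rule_r1(status: str, instance_count: int) -> int:
    """Rule R1: if the KPI status is red, increment the instance count by 1."""
    return instance_count + 1 if status == "red" else instance_count


NOW = datetime(2011, 6, 24, 12, 0, 0)
# The four sample values from the example, taken 10 seconds apart.
SAMPLE = [DataPoint(NOW - timedelta(seconds=10 * i), v)
          for i, v in enumerate([80, 40, 60, 80])]

status = kpi_status(SAMPLE, NOW, timedelta(hours=1), goal=75)
print(status, apply_rule_r1(status, 3))
```

The four sample values average 65%, which meets the goal, so the KPI stays green and Rule R1 leaves the instance count alone. Individual spikes to 80% do not trigger scaling on their own, which is the point of aggregating over a window.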

This is good, but users told us that they don’t necessarily want to focus on low-level performance counters, but rather on some business KPI. Here’s an example of such a reactive rule. We intend to enable such specifications that use custom KPIs and custom actions:

- Metric: Work orders waiting for processing

- Source: Application

- Target: Specified in the custom action

- Data points: [10, 15, 12, 80, 100, 105, 103] collected every 15 mins

- KPI Aggregate function: TREND (Data points) over the past 3 hrs

- KPI Goal: + 50%

- KPI 2: If (KPI Aggregate Function (Source, Metric) > KPI Goal), then KPI status = red, otherwise KPI status = green

- Rule R2: If KPI 2 status = red, then do Custom Action C (scan the queue, provision another queue for work orders with “low” priority, and move all “low” priority work orders to that queue; listen on the main queue, when the queue clears move the “low” priority work orders back and de-provision the queue)
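Here is a rough Python sketch of how the work-order rule could be evaluated. Note the big assumption: this post does not define how TREND is computed, so I am using the percentage change from the first to the last data point in the window purely for illustration. The function names are hypothetical, and the custom action is just a callback standing in for Custom Action C.

```python
from typing import Callable, Sequence


def trend_percent(values: Sequence[float]) -> float:
    """Percentage change from the first to the last value.

    This is an assumed definition of TREND, chosen for illustration only.
    """
    if len(values) < 2 or values[0] == 0:
        raise ValueError("need at least two values and a non-zero first value")
    return (values[-1] - values[0]) * 100.0 / abs(values[0])


def evaluate_rule_r2(values: Sequence[float], goal_percent: float,
                     custom_action: Callable[[], None]) -> str:
    """KPI 2 is red when the trend exceeds the goal; Rule R2 then runs the action."""
    status = "red" if trend_percent(values) > goal_percent else "green"
    if status == "red":
        custom_action()
    return status


WORK_ORDERS = [10, 15, 12, 80, 100, 105, 103]

if __name__ == "__main__":
    result = evaluate_rule_r2(
        WORK_ORDERS, 50.0,
        custom_action=lambda: print("running Custom Action C"))
    print(result)
```

Under this assumed definition the backlog grew from 10 to 103 work orders, far more than the +50% goal, so the KPI turns red and the custom action runs. A different TREND definition (for example a fitted slope) would change the number but not the shape of the rule.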

Which actions are to be supported?

In the rule type descriptions, I mentioned instance scaling actions. They are not the only ones we plan to support. Here are various kinds of actions we plan to support:

- Instance Scaling. The Autoscaling Application Block varies the number of role instances in order to accommodate variations in the load on the application. Note that instance scaling actions can span multiple host services/subscriptions.

- Throttling. Instead of spinning up new instances, the block limits or disables certain (relatively) expensive operations in your application when the load is above certain thresholds. I envision you will be able to define various modi operandi for your app. In our sample application (a survey management system), advanced statistical functionality for survey owners may be temporarily disabled when the CPU utilization across all worker roles is higher than 80% and the maximum number of worker role instances has been reached.

- Notifying. Instead of performing instance scaling or throttling, the user may elect to simply receive notifications about the load situations with no automatic scaling taking place. The block will still do all monitoring, rule evaluation and determination of what adjustments need to be made to your Windows Azure application short of actually making those adjustments. It would be up to the user to review the notification and to act upon it.

- Custom action. In the spirit of Enterprise Library extensibility, we provide extension hooks for you to plug in your custom actions.
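One way to picture how these action kinds fit together is a common action interface plus a notify-only switch. The Python sketch below is my own illustration under that assumption. None of the class or function names come from the block, and the real extension hooks may look quite different.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Set


class Action(ABC):
    """Hypothetical common interface; a custom action would subclass this."""

    @abstractmethod
    def describe(self) -> str:
        """Human-readable summary, used for notifications."""

    @abstractmethod
    def execute(self) -> None:
        """Actually perform the adjustment."""


class ScaleAction(Action):
    """Instance scaling: change a role's instance count by a delta."""

    def __init__(self, counts: Dict[str, int], role: str, delta: int) -> None:
        self.counts, self.role, self.delta = counts, role, delta

    def describe(self) -> str:
        return f"change {self.role} instance count by {self.delta:+d}"

    def execute(self) -> None:
        self.counts[self.role] += self.delta


class ThrottleAction(Action):
    """Throttling: mark an expensive feature as disabled."""

    def __init__(self, disabled: Set[str], feature: str) -> None:
        self.disabled, self.feature = disabled, feature

    def describe(self) -> str:
        return f"disable feature {self.feature}"

    def execute(self) -> None:
        self.disabled.add(self.feature)


def run_actions(actions: List[Action], notify_only: bool,
                notifications: List[str]) -> None:
    """In notify-only mode, report what would be done without doing it."""
    for action in actions:
        if notify_only:
            notifications.append(action.describe())
        else:
            action.execute()
```

The design choice worth noting is that notification is modeled as a mode rather than as a separate action: the same rule evaluation produces the same list of actions, and only the last step (execute versus describe) differs. That matches the idea of doing everything short of actually making the adjustments.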

What data can be reacted upon?

We plan to enable the Autoscaling Application block to pull data from the following sources:

- Windows Azure Diagnostics tables (e.g. CPU utilization)

- Windows Azure Storage API (e.g. # unprocessed orders on a queue)

- Windows Azure Storage Analytics (such as transaction statistics and capacity data)

- application data store (your custom metric)

- real-time data sources instrumented in the app (e.g. # active users, # tenants, # documents submitted)

How will I configure the block?

Just like any other application block, the Autoscaling Application block can be configured both declaratively and programmatically.

There are actually two levels of configuration that you’ll need to do. The first is the configuration of the block itself (e.g. where it should look for rules, how often to evaluate the rules, where to log, etc.). This is no different from configuring any other application block. The second is the configuration of the rules with their corresponding actions, which are more like data managed by the operators (IT pros). With regard to declarative configuration, at a minimum there will be an XML configuration file with a defined schema, so that you get IntelliSense support when authoring the rules. We are also brainstorming other options, including PowerShell cmdlets and basic SCOM integration.
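To give a feel for what "rules as data" might mean, here is a small Python sketch that reads a rules document. Please treat the XML shape as entirely made up: the real schema is not shown in this post, and every element and attribute name below (`rules`, `constraintRules`, `rank`, `changeBy` and so on) is a placeholder I invented for illustration.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Invented rules document; the block's actual schema may look nothing like this.
RULES_XML = """
<rules>
  <constraintRules>
    <rule name="month-end" rank="1" role="WorkerRoleA" min="3" max="8"/>
  </constraintRules>
  <reactiveRules>
    <rule name="R1" kpi="KPI 1" whenStatus="red" role="WorkerRoleA" changeBy="1"/>
  </reactiveRules>
</rules>
"""


def load_rules(xml_text: str) -> Dict[str, List[dict]]:
    """Parse the hypothetical rules document into plain dictionaries."""
    root = ET.fromstring(xml_text.strip())
    constraint = []
    for el in root.findall("./constraintRules/rule"):
        rule = {
            "name": el.get("name"),
            "rank": int(el.get("rank")),
            "role": el.get("role"),
            "min": int(el.get("min")),
            "max": int(el.get("max")),
        }
        # Catch an obviously inconsistent range before it reaches the evaluator.
        if rule["min"] > rule["max"]:
            raise ValueError(f"rule {rule['name']}: min is greater than max")
        constraint.append(rule)
    reactive = [
        {
            "name": el.get("name"),
            "kpi": el.get("kpi"),
            "when_status": el.get("whenStatus"),
            "role": el.get("role"),
            "change_by": int(el.get("changeBy")),
        }
        for el in root.findall("./reactiveRules/rule")
    ]
    return {"constraint": constraint, "reactive": reactive}
```

The point of the sketch is the separation: an operator edits the rules document, while the block's own settings (where to find that document, how often to evaluate it) live in the regular application block configuration.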

Where will the block be hosted?

The Autoscaling Application block is a component, which you must host in a client. We envision that you will be able to use the block in different hosts:

- worker role

- Windows service on-prem

- stand-alone app on-prem.

Our sample application implementation will use the block from a worker role. However, there’s nothing in the block design that forces you to do so.

When will the Autoscaling Application Block be available?

Our plan is to ship this fall. We’ll be making regular CodePlex drops for you to inspect the block more closely and to provide feedback. Don’t wait too long: we are moving fast!

Final notes for today

This initial post gives you a quick overview of what’s coming in the Autoscaling Application Block. In future posts, I intend to address the following topics:

1) Multiple rule interaction

2) Scale groups

3) Kinetic stabilizer for dealing with the high frequency oscillation problem

4) Specifying custom business rules

5) Declarative configuration

6) Programmatic configuration

7) Autoscaling trace trail/history

8) Autoscaling visualizations

I’d like to conclude with an important note. To take advantage of the Autoscaling Application Block, your application needs to be designed for scalability. There are many considerations. For example, a simple web role may not be scalable because it uses a session implementation that is not web farm friendly. I’ll try to touch on these considerations in future posts. But for now remember, the Autoscaling Application Block doesn’t automatically make your application scalable!