Deciphering Performance Data–CSV Volumes

I always feel so redundant when saying “CSV Volume”. It’s like saying “Cluster Shared Volume Volume”, but ah well, here we go.

Gathering performance data for CSV volumes can be confusing at first glance. The key thing to understand is that multiple nodes and multiple VMs are hitting the same CSV disks from every possible direction. If you look at only a single node in your cluster, you will see only one small piece of the puzzle.

Today’s entry starts the discussion at a very basic level, as we try to explain what is going on and what you can do to see the kind of activity that’s happening on your CSV... umm, Volumes. :)

The Tools

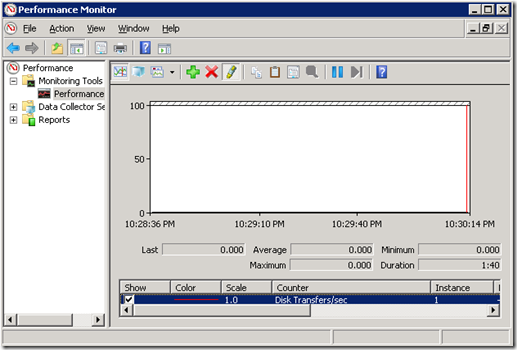

For this demo, I am going to be sticking with Performance Monitor to keep things very simple.

In Perfmon, we will look primarily at the PhysicalDisk object's Disk Transfers/sec counter, which shows the combined total of disk reads and writes that are going on. You can break this down into Disk Reads/sec and Disk Writes/sec for specifics, but as I said, I'm keeping this one simple.
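If you would rather capture these counters from the command line than watch the Perfmon graph, Windows also ships the `typeperf` tool. The sketch below is a minimal Python helper that only builds the `typeperf` argument list for sampling the three PhysicalDisk counters on one machine. `typeperf_command` is my own hypothetical name, not part of any library, and the host and file names are placeholders.

```python
from typing import List

# PhysicalDisk counters discussed above; "(*)" selects every disk instance.
COUNTERS = [
    r"\PhysicalDisk(*)\Disk Transfers/sec",  # reads + writes combined
    r"\PhysicalDisk(*)\Disk Reads/sec",
    r"\PhysicalDisk(*)\Disk Writes/sec",
]


def typeperf_command(computer: str, interval_s: int = 1, samples: int = 60,
                     out_file: str = "disk.csv") -> List[str]:
    """Build (but do not run) a typeperf argument list for one machine."""
    return [
        "typeperf", *COUNTERS,
        "-s", computer,          # machine to collect from
        "-si", str(interval_s),  # seconds between samples
        "-sc", str(samples),     # number of samples to collect, then exit
        "-f", "CSV",             # output file format
        "-o", out_file,          # output file name
    ]
```

On a Windows machine you could pass the list to `subprocess.run(cmd, check=True)`, once per cluster node and once for the storage server, so that every machine is captured over the same time window.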

My setup is as follows:

- 2 Hyper-V Cluster Nodes running Windows Server 2008 R2 Service Pack 1

- 1 CSV Volume (on iSCSI for simplicity’s sake)

- 4 network connections: iSCSI, Client/Management, Cluster/CSV, and Live Migration (no matter how many NICs you have on these HAVM clusters, it’s never enough!)

- 2 VMs each running Windows Server 2008 R2 ‘doing stuff’

- 1 stand-alone Windows Server 2008 R2 hosting my iSCSI targets

The Test

First off, thank you to Cristian Edwards for opening my eyes to the scenario we are about to see!

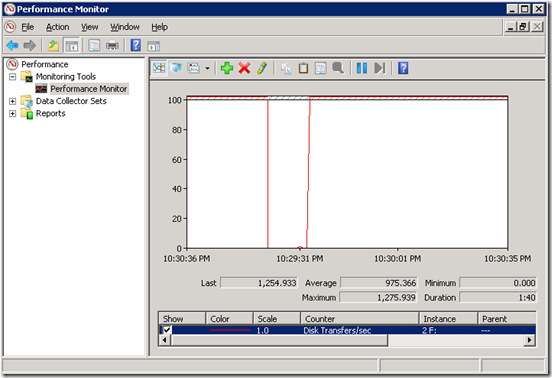

In the first test, we are going to load the Disk Transfers/sec counter in Perfmon on each cluster node and on the storage server. We will then place one VM on each of the two cluster nodes. When ready, I will start work inside the VMs one at a time. We will then check what the disk counter shows on each cluster node and compare it to what we see on the storage server. Logically, the I/O of the two cluster nodes added together should come close to what we see on the storage server.
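The expectation in this test can be written down as a simple check. This is a sketch under my own assumptions: `compare_to_storage` is a hypothetical helper, and the 10% tolerance is arbitrary, since counters on separate machines are never sampled at exactly the same instant.

```python
from typing import Dict


def compare_to_storage(node_iops: Dict[str, float], storage_iops: float,
                       tolerance: float = 0.10) -> bool:
    """Return True if the summed per-node IOPS is within `tolerance`
    (a fraction of the storage value) of what the storage server reports."""
    node_total = sum(node_iops.values())
    if storage_iops == 0:
        # Nothing reached the storage, so the nodes should show nothing too.
        return node_total == 0
    return abs(node_total - storage_iops) / storage_iops <= tolerance
```

With made-up numbers, `compare_to_storage({"NODE1": 500.0, "NODE2": 400.0}, 880.0)` passes, while passing only `NODE1` fails: that is the single-node trap, because the other node's I/O is missing from the sum.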

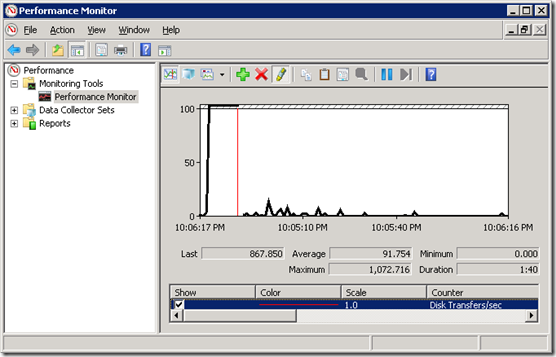

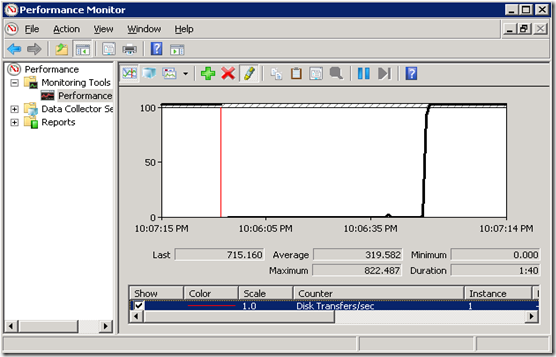

In the first two images, you can see where I kicked off some work inside VM1 and, at nearly the same time, inside VM2. VM1 is hitting around 867 IOPS, with VM2 sitting around 715.

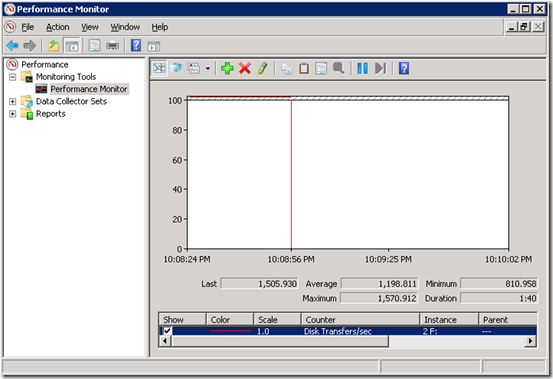

Moving down to our storage server, in this case my Microsoft iSCSI Software Target, we see that the disk is getting 1505 IOPS: pretty much the sum of these two VMs hitting the disk at the same time.
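A quick check of the numbers, treating the values read off the graphs as approximate:

```latex
867 + 715 = 1582 \ \text{IOPS (nodes combined)} \quad \text{vs.} \quad 1505 \ \text{IOPS (storage server)}

\frac{1582 - 1505}{1505} = \frac{77}{1505} \approx 0.051 \quad (\approx 5\%)
```

A gap of roughly 5% seems reasonable, given that the node readings and the storage reading were not taken at exactly the same moment.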

Suffice it to say, if you look at only one node in your Hyper-V cluster, you will see only what is happening on that node and miss whatever the other cluster nodes are doing to the storage. If you have storage-side performance tools, they are likely the best way to see the actual performance of the underlying storage.
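Since no single node tells the whole story, one option is to capture the same counter on every node and add the results together. The Python sketch below assumes each node's data was saved with `typeperf -f CSV`, where the first column is a timestamp and every other column header is a full counter path such as `\\NODE1\PhysicalDisk(2)\Disk Transfers/sec`. The function names, host names, and the `PhysicalDisk(2)` instance for the CSV disk are all hypothetical; check which instance your CSV disk actually is before filtering on it.

```python
import csv
import io
from statistics import mean
from typing import Dict, Iterable


def average_by_column(csv_text: str, counter_suffix: str) -> Dict[str, float]:
    """Average each column of typeperf CSV output whose header ends with
    `counter_suffix`. The timestamp column is skipped, and blank or
    non-numeric samples (typeperf may write a blank first sample) are ignored."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, data = rows[0], rows[1:]
    averages: Dict[str, float] = {}
    for col, name in enumerate(header[1:], start=1):
        if not name.endswith(counter_suffix):
            continue
        values = []
        for row in data:
            try:
                values.append(float(row[col]))
            except (IndexError, ValueError):
                continue
        if values:
            averages[name] = mean(values)
    return averages


def cluster_total(node_csvs: Iterable[str], counter_suffix: str) -> float:
    """Sum the averaged counter across every node's capture."""
    return sum(sum(average_by_column(text, counter_suffix).values())
               for text in node_csvs)
```

Filtering on the CSV disk's own instance, rather than `_Total`, keeps each node's local system disk out of the sum.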

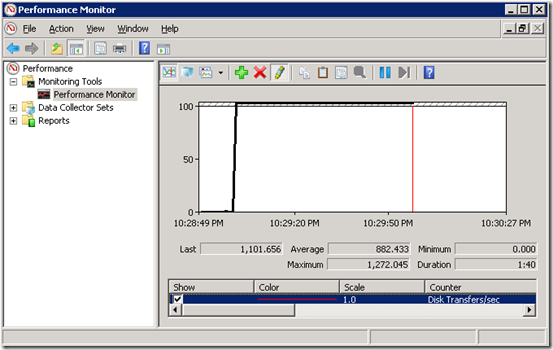

Now let's try something different. I am going to enable ‘redirected I/O’ on the CSV and see what happens when we restart the test. The CSV is currently owned by cluster node 1, which means that all of the I/O from node 2 should be routed through node 1 during this test.

This is very interesting! I started work on VM1 and, as expected, we see the I/O shooting up. I then started the same work as before in VM2, but no I/O shows up on the second node of the cluster. Instead, the I/O registering on the first cluster node is higher than in the first test: node 1 is now showing the I/O from both cluster nodes, while node 2 shows nothing at all.
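The behavior in this run fits a simple model: with redirected I/O turned on, a node that does not own the CSV sends its I/O over the network to the owning node (often called the CSV coordinator node), so that I/O is counted by the owner's PhysicalDisk counter instead of its own. The sketch below is a deliberately simplified illustration of that model with hypothetical names and made-up numbers, not a description of the cluster's internals.

```python
from typing import Dict


def observed_disk_iops(vm_iops_by_node: Dict[str, float], coordinator: str,
                       redirected: bool) -> Dict[str, float]:
    """Return the IOPS each node's PhysicalDisk counter would show for the
    CSV disk in this simplified model.

    Normal mode: each node sends its own VMs' I/O straight to the disk.
    Redirected mode: all I/O is funneled through the coordinator node,
    so only the coordinator's counter sees it.
    """
    if not redirected:
        return dict(vm_iops_by_node)
    observed = {node: 0.0 for node in vm_iops_by_node}
    observed[coordinator] = sum(vm_iops_by_node.values())
    return observed
```

For example, with `{"NODE1": 800.0, "NODE2": 700.0}` and `NODE1` as coordinator, redirected mode reports 1500 on `NODE1` and 0 on `NODE2`. The cluster-wide total is the same in both modes; what changes is which node reports it.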

On the storage server, we can confirm this finding: the I/O there matches what cluster node 1 reports.

As you can see, collecting performance data for CSV volumes is a puzzle, and without all of the pieces it can be hard to tell what you are looking at. In this very controlled environment, it is easy to see. In a large enterprise environment, though, many more nodes may be hitting a SAN that is also shared with any number of other servers, and on top of that, VMs spread out everywhere are doing all kinds of work on the CSV volumes. That gets much more complicated. Hopefully, in this blog and future ones, I can help you keep track of your I/O!

Keep in mind that there are CSV-specific counters that can also be used to view ‘redirected’ I/O, but I will have to save those for next time.

More tk…